Generated voices from training data always garbled.... but works fine using tortoise-tts-fast ... (?) #113


Reference: mrq/ai-voice-cloning#113


http://sndup.net/dxm4 = Mrq version

This is what my trained model (training done with the mrq repository) sounds like when I generate speech with the mrq-based generation UI. This is pretty much the best outcome I've had. This one is from 200 epochs of training, but I've tried everything up to 9000 epochs and it's the same garbled outcome.

Someone suggested trying tortoise-tts-fast to generate instead.

Using the same training data .pth file.

https://sndup.net/jkhc/ = tts-fast generator (https://github.com/152334H/tortoise-tts-fast)

... and it actually sounds like it's supposed to! By far my best result. It even seems to have the right accent.

Which makes me now ask... what's the issue with the tts generator in this repo? I can only assume I'm doing something wrong, but I've tried every temperature from 0.1 up to 1.0, and I've tried ultra-fast, fast, standard and high quality.

That's strange. Can you send over that model for me to test against?

I can't imagine I broke anything. The only thing I can think of would be it somehow not actually using the model for generating.

The pth file? Sure, I can:

https://nirin.synology.me:10003/d/s/shjzhza68dqHiOpbkfV7N1fQmtcCXkg4/-RMnQPcvC_fJhQEhSL2fxTV4MlYdIHfb-b7GAd1L5Rgo

And for the sake of sanity, I've also added screenshots of the generator page and my settings page, showing that I do (I think?) have everything set up correctly:

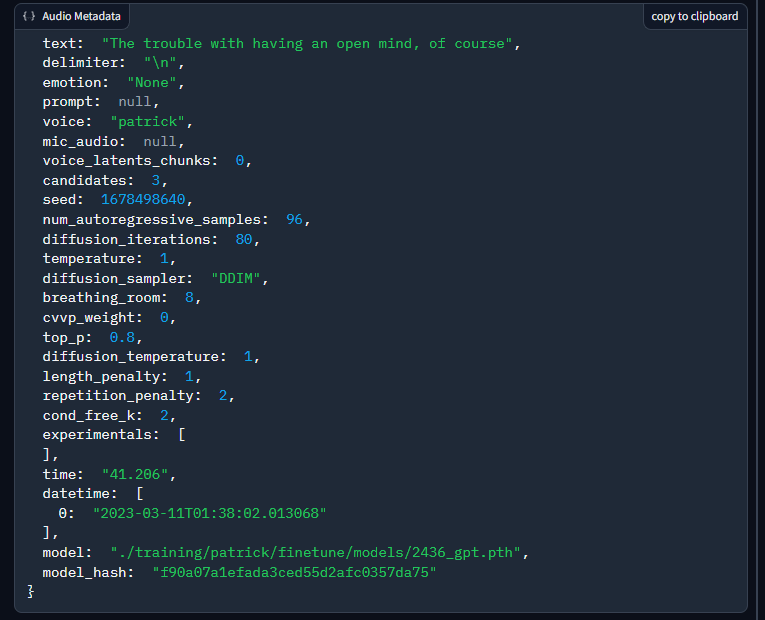

Oh right, I can actually check the settings for the one generated through AIVC:

The only thing I can think of right now is that it's not using Half-P and Cond. Free (ironically, the settings I forgot to fix last night). I doubt those are the culprits. I just did a test on a model and it sounded terrible, but that's probably because the model I tested was terrible anyway; later generations sound better, but still not right.

Oh you beat me to the punch. I'll test the model. I doubt I caused a regression between yesterday and today.

I have done training on various versions of the mrq repo over the last few days (I tend to update whenever you say you've fixed something haha) but all of my tests have been garbled like this (usually even worse than the example I gave above).

I don't know what's different with the fast-tts generator, but it seems to be picking up the accent (which seems to be the main issue with untrained voices) and isn't at all garbled etc.

But yeah, it's not that the mrq repo broke today; it has literally never generated a legible/understandable voice for me, across the last few days of various versions and updates.

Oh, there's one other difference between the two tortoise-tts forks: how the latents are calculated. I don't recall the other fork saving latents, so there won't be an easy way to transplant them from fast. I think you can load these ones fine in fast, though. I'll need to check the code again.

I'll return with instructions on how to go about it in a few minutes.

Thanks for looking into it. I would much prefer it if I could do the entire process within your repo version, as the gui and everything is so much nicer to use (it took me ages of struggling to get tts-fast working earlier for testing, as whoever runs the repo has a bunch of broken instructions -.- )

Yeah, it looks like you can just copy the `./voices/patrick/cond_latents_f90a07a1.pth` file and paste it into the fast repo's voice folder (I'd suggest a new voice folder, just to make sure it loads only that). If it sounds terrible, then the culprit is how the latents are being computed.

Regarding latents and "Leveraging LJSpeech dataset for computing latents": does that mean the latents are sensitive to the quality of the dataset generated by whisper/whisperx/whispercpp? So theoretically, better processing with a larger whisper model (even on CPU) would produce higher-quality latents? Whisper in its various iterations doesn't take too long to run on reasonably sized datasets; I'm sure most users wouldn't mind spending a few extra minutes generating data with whisper if it means the latent quality increases.

Not so much the quality of the transcription itself, but how accurate the segments are. Naturally, smaller whisper models will not segment the audio as nicely as the larger models.

In theory, yeah. I haven't really tested it with lower-quality segmentation.

The only caveat is VRAM consumption for whisper/whisperx with the large models, or system RAM consumption if you manage to make them use the CPU only. whispercpp has less egregious RAM requirements: I think even its large model consumes ~5GiB, roughly half of what the large models for whisper/whisperx need (for a dataset of ~200).

Although, it's just in theory. As I said I haven't tested transcriptions on anything less than large-v2 for the dataset-hinting.

I deleted all the voice folders and put the conditional latent file in the voices folder, and the generated speech was garbled (much like what mrq version does).

I then kept everything the same, but changed the voice pointer to the patrick source voices folder in the mrq repo (still using the same AR model path, the 2836.pth file), and it output proper speech with the accent.

So I guess the mrq latents are the issue (?)

I suppose so. I guess my fancy magic super duper accuracy boost is flawed in some cases.

If that's the case, you can always manually set the voice chunk size to something to keep it from leveraging the LJ dataset. I don't have a good number, but something like 8 never hurts.

That also means I might need to have it use the default behavior too, if it works better in some cases, since I believe the fast repo by default uses the old behavior (use the first 4 seconds of each sound file). I'll assume you haven't touched that setting for the other fork.

I'm actually using this repo for it - https://github.com/152334H/DL-Art-School

but I think it just uses the default fast-tts repo, just with a gui on top.

I'll try yours with voice-chunk = 8 (instead of the default 0)

Should I be using half-precision and conditioning-free in the experimental settings?

edit - Tried voice-chunk 8 and 12, but both ended up with

"CUDA out of memory. Tried to allocate 1.19 GiB (GPU 0; 24.00 GiB total capacity; 22.03 GiB already allocated; 0 bytes free; 22.30 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF"

Definitely enable conditioning-free; half-precision I'm still iffy on. Ever since BitsAndBytes was crammed in, I feel it sometimes boosts quality a bit, but it might be placebo.

Which reminds me, I need to fix cond-free not defaulting to being on.

I guess you have too large of a dataset. Bump it to 16 or 24, or however high it needs to be.

I just tested a model that had terrible output, and I manually set it to 24 chunks and it started to sound better, so I guess it is how latents are being calculated. Oops.

had to put chunks up to 32 to avoid out-of-memory errors (seems even 24gb of vram isn't enough these days lol).

But I am now getting actual accented voices out of the generator!

I wonder why your fancy accuracy boost doesn't work for me?!

It might have to do with sound file length. If there's even one really long sound file compared to the rest, it'll cause all other sounds to pad to the largest length to easily match. It could be from that, and a lot of latents are using dead air.

If you're ooming while generating try lowering your Sample Batch Size in settings.

It's possible your fancy magic super duper accuracy boost is correct, but it is the whisper transcribe and dataset that is wrong. Personally I am getting audio chunks that are cut off too early and I don't even know how to manually correct all of it. I can manually edit wav files, but without remaking whisper.json, wouldn't it make the latents even more broken? Does whisper.json even matter for this?

Ideally whisper would get it perfect every time. But if it can't, then whisper -> human -> latents generation based off dataset would be ideal.

It's OOMing when generating the latents. Low latent chunk counts with a very large dataset will OOM since it's using larger batches.

WhisperX is better at segmenting since it does forced alignment blahblahblah, if you aren't already using that.

`whisper.json` doesn't get used at all; it's just an output file that I may use in the future (probably never, but it doesn't hurt to keep it).

You're free to manually edit and re-trim the clips, even after transcription, for training. The only magic it does for latents is to pad every segment to the largest segment size and set the chunk size to the dataset count.
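To see why one very long file matters so much under that scheme, here is a minimal sketch of the padding arithmetic. It assumes clips are plain lists of samples (or just their durations in seconds); `pad_to_longest` and `padding_stats` are hypothetical helpers for illustration, not the web UI's latent code, and the chunk-count side of the behavior is not modeled.

```python
def pad_to_longest(clips):
    """Zero-pad every clip (a list of samples) to the length of the longest clip."""
    longest = max(len(clip) for clip in clips)
    return [list(clip) + [0.0] * (longest - len(clip)) for clip in clips]


def padding_stats(durations_s):
    """Given clip durations in seconds, return (padded_total, speech_total,
    dead_air_fraction) after every clip is padded to the longest one."""
    if not durations_s:
        raise ValueError("need at least one clip")
    longest = max(durations_s)
    padded_total = longest * len(durations_s)  # every clip now lasts `longest`
    speech_total = sum(durations_s)            # actual audio before padding
    return padded_total, speech_total, 1.0 - speech_total / padded_total


# One hour-long file among 999 five-second clips: almost everything is padding.
_, _, dead_air = padding_stats([3600.0] + [5.0] * 999)
print(f"dead air: {dead_air:.1%}")  # prints: dead air: 99.8%
```

The same arithmetic explains the milder case later in the thread: a single 15 s clip among mostly sub-5 s clips still means most of each padded clip is silence.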

The thing is, I have very little to complain about with the whisperx transcription process. It's the splitting of the audio into chunks that is sus. I've seen on https://github.com/m-bain/whisperX that they have a lot of features we aren't using right now. Sadly, some of the models require a huggingface token to work, but perhaps some of the extra features could help with splitting the audio.

ahhhh... I dooooo have a sound file which is 1hour+ because it's a narrated audiobook file (with the bad bits snipped out, but still kept as one single file).

I was going to try just using the whisper-cut-up files as the voice files, but someone suggested not to do that because they might cause issues from being too short!

desu I don't think either VAD or diarization is necessary; if your samples are that dirty, I don't think you should use them for either generating latents or finetuning:

I could really, really be wrong though, as I am not an expert.

That'll do it. If it's in `./training/patrick/audio/`, then it'll try to pad every other segment to an hour+.

Which I managed to finally catch and work around. DLAS has a hidden minimum sound length that, for some reason, it won't mention. The transcription process should throw out anything that would make the training process unhappy, so you're free to segment them with the transcription process.

Oh, no, that file is in the voices/patrick folder. In the training/patrick/audio folder it's been cut up by whisper into a bunch of short files.

Maybe one of the supposedly 'short' cut up files is iffy, and longer than it should be... I will check. If I delete an audio file, do I just need to remove it from the train.txt file? or will it need to be removed from other places too?

Can we make the cuts for whisper?

Say I take my 1-minute source and cut it into sentences. Can whisper then take each sentence and not cut it further? That's what comes to mind when thinking about how to stop whisper from cutting off mid-sentence.

Ah, then it shouldn't affect it, as the "leveraging of the LJ-formatted dataset" thing sources its sound inputs from the `./training/{voice}/` folder itself, rather than from what's in the voice folder.

You can simply remove the line from the text file itself. The process reads the `train.txt` file and loads the voice samples from there.

I'll need to read up on whether whisper has a flag for it. If not, then I suppose I can implement it myself by stitching fragments together to form sentences instead.
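Stitching fragments back into sentences could look roughly like the sketch below. It assumes whisper-style segment dicts with `start`, `end` and `text` keys, treats `.`, `!` and `?` as sentence ends, and borrows the 11.6 s clip limit mentioned later in this thread as a default cap. `merge_into_sentences` is a hypothetical helper, not something whisper or the web UI provides.

```python
SENTENCE_END = (".", "!", "?")


def merge_into_sentences(segments, max_duration=11.6):
    """Stitch whisper-style segments ({'start', 'end', 'text'}) into
    sentence-level segments, without letting a merge exceed max_duration."""
    merged = []
    current = None
    for seg in segments:
        text = seg["text"].strip()
        if current is None:
            current = {"start": seg["start"], "end": seg["end"], "text": text}
        elif seg["end"] - current["start"] > max_duration:
            # Adding this fragment would make the clip too long: close the current one.
            merged.append(current)
            current = {"start": seg["start"], "end": seg["end"], "text": text}
        else:
            # Extend the running sentence with this fragment.
            current["end"] = seg["end"]
            current["text"] = f"{current['text']} {text}"
        if current["text"].endswith(SENTENCE_END):
            merged.append(current)
            current = None
    if current is not None:
        merged.append(current)  # trailing fragment without final punctuation
    return merged
```

A single segment that is already longer than the cap is passed through untouched, so it would still need manual trimming.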

I don't know if it's good or not, just brainstorming. This will be awful for long files. Although theoretically, if whisper can play nice with what it is given, then another tool can do the cutting.

It should be good, my main issue with whisper is that I'm seeing a lot of single words that get segmented off. I just need to also evaluate how intrusive it'll be to implement it.

So I just did a fresh transcribe using whisperX and LargeV2.

The longest file is 15s, with a couple 14s and a bunch of 9s and 10s.

They then ramp down all the way to 1s.

But there is also a lot (158 out of 1343 files) which are 0s. Some still have a single word or two in there, but there's a bunch which seem to be completely empty (they probably have a single-word in the transcription but I can't hear anything)

oh most of those files seem to be in the 'validation.txt' not in train.txt. Not sure what that file does.

I see https://github.com/m-bain/whisperX/blob/main/whisperx/transcribe.py has `no_speech_threshold: Optional[float] = 0.6`, documented as:

"no_speech_threshold: float. If the no_speech probability is higher than this value AND the average log probability over sampled tokens is below `logprob_threshold`, consider the segment as silent."

Maybe this needs tweaking, or should be user-customizable?
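The quoted rule boils down to the check below. This is a post-hoc sketch over openai-whisper-style output segments, which carry `no_speech_prob` and `avg_logprob` keys. Whisper itself applies the rule per decoding window during transcription, so filtering finished segments this way is only an approximation, and `drop_silent` is a hypothetical helper. The defaults mirror whisper's (0.6 and -1.0).

```python
def is_silent(segment, no_speech_threshold=0.6, logprob_threshold=-1.0):
    """Whisper's silence rule: high no-speech probability AND low average log-prob."""
    return (segment["no_speech_prob"] > no_speech_threshold
            and segment["avg_logprob"] < logprob_threshold)


def drop_silent(segments, **thresholds):
    """Keep only segments that the rule above does not classify as silent."""
    return [seg for seg in segments if not is_silent(seg, **thresholds)]
```

Raising `no_speech_threshold` keeps more borderline segments; lowering it culls more aggressively. Both conditions must hold, so a confident transcription (high `avg_logprob`) survives even with a high no-speech probability.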

Why does whisper even need to splice the audio up? If you're making a TV series you want one line per subtitle, but we don't care about any of that. We just want it to fit in memory. Hell, computing latents should be an infrequent event. We already have an option to do it on CPU, so it doesn't even have to fit in VRAM, just RAM. I bet for many users' use cases the entire voice sample would fit in one go, along with the whisper model.

I do think it could only be an improvement if there was an automated way to remove any transcribed clips that are below a certain length, as most of those are half-words or weirdly cut off. Not sure how easy/possible that is to implement in the mrq gui.
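Automating that cull is mostly bookkeeping. The sketch below assumes `train.txt` holds LJSpeech-style `path|transcription` lines with paths relative to the folder containing `train.txt`, and that the clips are PCM WAV files. `cull_short_clips` and `wav_duration` are hypothetical helpers, and the 0.6 s default is the DLAS minimum mentioned later in this thread. Culled lines are returned so they can be reviewed rather than silently lost.

```python
import wave
from pathlib import Path


def wav_duration(path):
    """Duration of a PCM WAV file in seconds."""
    with wave.open(str(path), "rb") as wf:
        return wf.getnframes() / wf.getframerate()


def cull_short_clips(train_txt, min_seconds=0.6, delete_audio=False):
    """Drop train.txt lines whose audio is shorter than min_seconds.

    Rewrites train.txt in place and returns the removed lines. If delete_audio
    is True, the short WAV files are also deleted from disk.
    """
    train_txt = Path(train_txt)
    root = train_txt.parent
    kept, culled = [], []
    for line in train_txt.read_text(encoding="utf-8").splitlines():
        if not line.strip():
            continue
        audio = root / line.split("|", 1)[0]  # assumed "path|text" layout
        if wav_duration(audio) < min_seconds:
            culled.append(line)
            if delete_audio:
                audio.unlink()
        else:
            kept.append(line)
    train_txt.write_text("\n".join(kept) + ("\n" if kept else ""), encoding="utf-8")
    return culled
```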

Is the length difference in my files (0s up to 15s) enough to be the cause to my garbled latents do you think?

I can't imagine 0s files being anything other than poorly cut off. If you have enough data then I'd drop the worst part of it.

Yeh I agree, though that's why I mentioned it would be nice if this was able to be automated... as I'll have to manually remove ~150 entries from the text file lol.

~11% might be enough to significantly throw off latents.

The validation dataset is a list of samples that get culled out from the training dataset for being under a certain length (default 12 characters), and can be recycled to run validation if requested during training.

You'll probably want to re-generate your dataset after making sure AIVC is up to date, as it'll check for short sound files (and not short transcriptions) to cull completely.

Having one giant long text string and one giant long voice file is a very, very bad thing for training.

I'm frequently regenerating them when I'm comparing between checkpoints for a model, then scrapping the model entirely to retune from scratch.

Before having it source the segmented transcriptions, I was also constantly toying with the chunk size to find close-to-the-best setting for a model, finetuned or not.

It's slow on CPU, believe me. When I was running it on DirectML on my 6800XT instead of CUDA on my 2060, it was a huge pain even on smaller counts. It adds up a lot too when it's tiny segments.

No way. From what caught wind back to me from /g/, there were a substantial number of posts about OOMing while generating latents because of how I was handling the default. I think it naively used the file count, and people just had one giant voice file; they didn't know better, since my original documentation from when it was just a rentry OK'd it, as no one knew exactly how latents were handled. Even after modifying it, I still OK'd it by just having a chunk size cap.

It did for the past few days if you culled to the validation dataset based on text length. As of a few hours ago, though, it'll ignore very short clips (I think the cutoff is 0.6 seconds, since DLAS throws errors for anything below that).

Just run "Prepare validation" in the "Train > Prepare Dataset" tab. Play around with the cutoff value to see how many lines get removed.

If I have the brain juices left, I could probably whip up a setting to do it by sound length.

Ahh I see, yes, but that still leaves the short audio files in the folder. It would be nice if the short files were deleted as part of the culling, as I assume because they remain in the folder they will still be used for the latents calculations (which may be part of the issue I'm having).

Though it seems that with 1000 files in the dataset, with only 1 being 15s and the majority being 5s or less, there will always be a lot more 'empty space' in the padded files than actual words.

So I'm trying again, with a fresh training session, but now I seem to be getting the groany/garbled generated voices on both mrq (using manual voice chunks) AND in fast-tts lol. So this time I assume the issue is the training...

I'm using a pretty small dataset, which probably doesn't help.

Screenshot the graphs from it. It was a pretty quick training, only 100 epochs at 0.000085.

So now I need to figure out... did I train too fast? Or did I not train for long enough? Or... did I train for -too long- (if training for too long is even a thing?)

I went back and tried one of the saved-state pth files from about epoch 40 and it was still garbled, so it doesn't seem to be a too-long issue.

I'll try it again tomorrow, and double check all the training files it's using.

The biggest problem I'm having when it comes to transcription, even when using WhisperX, is the transcribed text having a full sentence, but the audio having the first or last word or two cut off. I don't think the transcription timestamps are accurate enough to cut the audio apart properly.

I'm using clean audio files too; they're roughly 5-minute long compiled clips of ripped voices with virtually no background noise.

Same here. I usually run the dataset preparation, then check the files manually, then fix the ones where audio starts or cuts short with Audacity (by re-cutting the original file).

That only works on small datasets.

It doesn't look like it's a constant cut off either, sometimes it cuts the start of the audio sometimes the end, sometimes it's fine.

Well shit.

I just transcribed some more datasets with whisperx+large-v2 and they're consistently cut off too soon at the end. I compared them against whisper and whisper does a better job at that. I genuinely don't know how my processed datasets were fine earlier, unless I actually wasn't using whisperx for those.

I'll see what I can do to fix it. I added a button to disable slicing and a setting to cull too short sound files for using them for validation purposes during training. I'll push those in a moment.

Pushed commit 2424c455cb.

It seems every passing day I regret adding whisperx more and more. I'm very, very tempted to just remove it. It caused nothing but trouble.

WhisperX is gone. I'm done with it.

I had this same issue from day 1 with some of my transcribed files ending a word earlier than they should... but I didn't think it was a whisperx problem; I thought it also happened on the original whisper? Maybe I was mistaken.

What's the best setup to use now?

It's the same on whisper for me

Based on going through most of my voice samples, normal whisper's timestamps do have minor accuracy problems some of the time, but nowhere near as bad as whisperx, which does it both consistently and egregiously.

desu there's no real point in keeping whisperx around when the main point of it was better timestamps for segmenting, and it fails to do that so, so bad. It's just not worth the headaches it keeps bringing.

I think it'd be neat to use for diarization, but that requires an HF token, and given how terrible the timestamps are, I don't trust it all that much.

Ideally:

In short, if you don't need to segment, don't. I have it not slicing by default now, but you still can by either ticking the checkbox when transcribing, or clicking the slice button.

`whisper.json`, finally giving it a use.

https://github.com/openai/whisper/discussions/435

This seems to be the most recent discussion involving a fix for the inaccurate timestamps in whisper.

Otherwise, as you suggest, I may just DIY it.

I assume I can just load the file into an audio program, and find the exact timecode in seconds, and edit the whisper.json. Then re-slice.
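Assuming the clips are uncompressed PCM WAV, re-slicing after hand-editing a timestamp needs nothing beyond Python's standard `wave` module. `slice_wav` is a hypothetical helper, not the web UI's slicer, and it deliberately assumes nothing about how `whisper.json` is laid out; you pass it the corrected start and end times directly.

```python
import wave


def slice_wav(src, dst, start_s, end_s):
    """Copy the [start_s, end_s) span of a PCM WAV file into dst.

    Times are clamped to the file's length; returns the clip duration in seconds.
    """
    with wave.open(str(src), "rb") as wf:
        params = wf.getparams()
        rate = wf.getframerate()
        total = wf.getnframes()
        start = max(0, int(round(start_s * rate)))
        end = min(total, int(round(end_s * rate)))
        if end <= start:
            raise ValueError(f"empty slice: {start_s}s to {end_s}s")
        wf.setpos(start)                      # seek to the first frame of the clip
        frames = wf.readframes(end - start)
    with wave.open(str(dst), "wb") as out:
        out.setparams(params)                 # same channels/width/rate as the source
        out.writeframes(frames)               # header frame count is patched on write
    return (end - start) / rate
```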

The issue does seem to be that whisper only slices at whole-integer seconds. Even though all the entries have a decimal place (13.0, etc.), they are never something like "12.3".

edit - https://github.com/openai/whisper/discussions/139

This discussion seems to confirm this, but also mentions it might be a bug with the large dataset... though other people also weigh in with reasons (and fixes, but I don't understand most of those)

edit2 -

Somehow I have managed to get one of my datasets to transcribe with actual decimal timestamps (down to like "14.233s" so it gets pretty accurate) and it seems at first glance to be pretty accurate to the text. This was using a medium whisper.

However I also tried medium on another voice, and it still only does it in whole integers. So ... no idea wtf.

edit3 -

Even though I got a set of timestamps with decimals like 14.333, they still weren't accurate. I even had some transcriptions about 20 words long where the audio clip only had 10. The rest got spread into the next few slices, making a bunch of incorrect clips.

I think I'll do my next test of training using manually-created slices..

Yeah, that'd be the path of least resistance when manually slicing them: open it in Audacity/Tenacity, manually deduce start and end times, edit the `whisper.json`, then re-slice.

I see, that might explain some of the inconsistencies I saw between things getting timestamped fine-ish for me, since the stuff I transcribe on my personal system uses the medium model. I peeked at a transcription from the large model and the timestamps are mostly whole numbers (they're coerced to decimals when stored as JSON, because I suppose that's how Python's serializer works).
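A quick way to tell which case a transcription falls into is to count how many timestamps land exactly on whole seconds. This is a sketch over hypothetical segment dicts with `start`/`end` keys; where those segments live inside `whisper.json` is not assumed, and `whole_second_fraction` is an illustrative helper.

```python
def whole_second_fraction(segments):
    """Fraction of start/end timestamps that fall exactly on a whole second."""
    stamps = [t for seg in segments for t in (seg["start"], seg["end"])]
    if not stamps:
        return 0.0
    return sum(float(t).is_integer() for t in stamps) / len(stamps)


# A fraction near 1.0 suggests the coarse, whole-second timestamps described above.
print(whole_second_fraction([{"start": 0.0, "end": 13.0}, {"start": 13.0, "end": 14.233}]))
```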

Yeah, that's probably for the best.

I've added blanket start/end offset options when slicing, and it seems pretty favorable: I re-processed my Patrick Bateman samples and they mostly only need the start times offset back by 0.25 seconds.
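Blanket offsets amount to shifting every segment's boundaries by a fixed amount and clamping the result to the file. A minimal sketch over hypothetical segment dicts (`start`/`end` in seconds); `apply_offsets` is illustrative, not the web UI's implementation. A start offset of -0.25 corresponds to the Patrick Bateman case above.

```python
def apply_offsets(segments, start_offset=0.0, end_offset=0.0, duration=None):
    """Shift every segment's start/end by fixed offsets (seconds), clamped to
    [0, duration]. Returns new dicts; the input segments are left untouched."""
    adjusted = []
    for seg in segments:
        start = max(0.0, seg["start"] + start_offset)  # negative offset = start earlier
        end = seg["end"] + end_offset                  # positive offset = end later
        if duration is not None:
            end = min(duration, end)
        adjusted.append({**seg, "start": start, "end": end})
    return adjusted
```

Returning copies keeps the original timestamps around, so different offsets can be tried against the same transcription without re-running whisper.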

I might add a way to play back parsed sample pieces, so I don't have to keep transferring files off my beefy system to my personal system.

How feasible would it be to run sliced audio through Whisper a second time to see if the transcription matches the sliced audio? If the re-transcription doesn't match, you can throw out that slice.

mm, I suppose that could be one way to automatically check if segments aren't trimmed too much. I could then dump the failures into another text file to narrow down what's needed for manual intervention.

The only qualm is the additional overhead; large datasets already take quite some time even on the medium model, although I'll say that if you're using a large dataset, you'd better damn well have your samples segmented.
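The comparison half of that check could be as simple as the sketch below; obtaining the re-transcription itself (for example from openai-whisper's `model.transcribe(path)["text"]`) is left out. Case and punctuation are normalized before scoring with `difflib.SequenceMatcher`, so trivial formatting differences don't fail a clip. The 0.9 threshold and the helper names are assumptions to tune, not values from this thread.

```python
import difflib
import re


def normalize(text):
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    text = re.sub(r"[^\w\s']", " ", text.lower())
    return " ".join(text.split())


def transcript_matches(expected, retranscribed, min_ratio=0.9):
    """Return (matches, ratio) comparing a slice's original transcription
    against a fresh transcription of the sliced audio."""
    ratio = difflib.SequenceMatcher(
        None, normalize(expected), normalize(retranscribed)
    ).ratio()
    return ratio >= min_ratio, ratio
```

Clips that fail could be written to a separate list for manual review instead of being discarded outright.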

I'm struggling to understand the parameters of the problem here.

Assume we have a perfect transcription.

If we start off with a single long dialogue wav, where would we ideally want it cut? Every sentence? As close to but under 30 seconds (internal whisper constraint)? As high as memory permits? Or would it not matter at all? Do we need X number of audio files to train using batchsize X?

If audio transitions are a point of failure, would we want as few of them as we can get away with? How few can we get away with, and what are we constrained by?

I'm assuming some information is carried over sentence to sentence, which makes me think every time we cut a consecutive dialogue we lose out on some information.

And alternatively, if we start off with many short wavs of isolated dialogue, perhaps as short as a few words each, would we always necessarily want to use them as-is and change nothing, since there's no meaningful way to gain information by stitching them together? At that point we just want to transcribe, with no cutting or stitching whatsoever.

Whisper use case was not necessarily model training, and we should be careful about assuming just because whisper does something a particular way, that it is what's best for our purpose.

Segment by every sentence, if:

`./models/tortoise/bpe_lowercase_asr_256.json`), it's under 402 tokens, as defined in the training YAML

And if it's too long, then I'll assume by clause, which is about what whisper would like to segment by (if it's not outright segmenting off the last word).

In terms of pure transcription, I don't think this is actually a problem, as I've had adequate transcriptions from everything-in-one-sound-file voice samples (such as the Stanley Parable narrator, and Gabe Newell) in my cursory tests.

You're neglecting how the original LJSpeech dataset is formatted: it's fairly blasé on being consistent. Some lines are multi-claused sentences, while others will split by clause, and some are just short sentences. For example:

I don't think sticking by a strict "every line should be the same length" would help at all with training the AR model. And in my experience, I've got decent results so far with base whisper blasting away giant blobs of audio.

It's just that whisperx has had egregious timestamp problems; I'm fairly sure it's been the (other) source of why I've been getting trash results after switching to it (the other being not matching unified_voice2 in DLAS closer to the autoregressive model in tortoise).

As long as clauses are intact, it shouldn't really matter.

Although, LibriTTS (what VALL-E was originally trained with) seems to be split by sentences wholly, and not just clauses, for what it's worth.

I'm actually looking at the transcriptions I've put on my huggingface repo for finetunes and my God, I must have really been pressed for time, as they're either awfully over-segmented or not segmented enough. Yet, for James Sunderland at least, those two performed really well. So I'm not too sure whether it's fine for the text itself to not be up to par, as long as the audio is relatively intact (James v2 has some trimming issues, however).

I agree whisperX got segmentation wrong, which undoubtedly led to troubles. It did well on multi-lingual transcribing but that's beside the point.

What I'm wondering about is how to get segmentation right. It's surprising to hear that sentence by sentence is okay, since whisper has condition_on_previous_text which according to them:

(It is merely used for transcribing, which I find weird.)

Human speech carries over sentence by sentence, just like it does token by token. I'm not saying every dialogue needs to be multi sentence long, sometimes humans say one line and that's okay. Perhaps our ideal data needs to capture a varied sample of multi line dialogue as well as single sentences. What I'm trying to figure out is what that ideal is. My impression is that single sentence audio segments has more to do with whisper being focused on transcription and less with single sentences being best for latent generation (Edit: and model training ofc).

As an aside, but it's part of my ongoing journey to clean up my training:

My outputs are now generating some fairly decent speech, even with very small datasets. However, the output audio quality is a little... low? It sometimes sounds a bit like old radio.

I tried voicefixer, but weirdly that seemed to make it worse rather than better.

Is there an obvious way to improve the audio quality of a generated voice that I'm missing?

I've been curious, often voice samples get put through a background noise removal process. The damage to the voice is typically invisible or minimal to human ear, but I wonder if it's substantial enough for the fine tuning process to pick up on it during training. Would it be beneficial to put the voice samples through some vocal regeneration model prior to sending them to tts for training? Of course naturally clean audio would be ideal, if only we would be so lucky.

I forgot I was too fried over the weekend to respond.

In the scope of segmenting for training, segmenting is only actually necessary if the main text is over 200 characters or the main audio is over the 11.6s requirement imposed by the training YAML (which I imagine is necessary due to how many mel tokens are within a sequence in the AR model).
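Both limits are easy to check per clip before training. A minimal sketch, assuming you already know each clip's duration and transcription; the 11.6 s and 200-character limits come from this thread, while `needs_segmenting` itself is hypothetical. The 402-token limit mentioned earlier would need the BPE tokenizer and is not checked here.

```python
MAX_SECONDS = 11.6  # audio limit quoted from the training YAML in this thread
MAX_CHARS = 200     # text limit quoted in this thread


def needs_segmenting(duration_s, text, max_seconds=MAX_SECONDS, max_chars=MAX_CHARS):
    """Return the reasons a clip would need segmenting (empty list if none)."""
    reasons = []
    if duration_s > max_seconds:
        reasons.append(f"audio {duration_s:.2f}s exceeds {max_seconds}s")
    if len(text) > max_chars:
        reasons.append(f"text {len(text)} chars exceeds {max_chars}")
    return reasons
```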

I imagine 2+ sentences per line is a challenge more so from having to keep it within the restrictions the model/training configuration imposes.

If your dataset specifically has a sentence where one depends on the other, then by all means, keep it in one file (as long as it doesn't exceed the limits).

As for that, I imagine (I don't know for sure, but given how robust GPT2 is, this is probably true) latents are only really calculated by running mel tokens through the AR and inferring the latents within a token string.

I'm sure the original issue here was just from an oversight on my end, rather than the AR model itself being trained wrong (which is still a possibility given the over reliance on liberally timestamped segments).

You probably were still on the version before I fixed the generation settings not persisting (to keep Condition-Free enabled). I had some test generations that improved a lot after realizing it kept disabling itself.

desu I'm not too sure if it matters all that much, given that the waveform is pretty much compressed so much into a mel spectrogram.

Per the tortoise design doc:

I'm sure any (very) minor noise or imperfections will get filtered out by compression, and cleaned up from the diffusion/vocoder.

My only doubt is that I don't have many datasets that have background noise. The only one I can think of is Patrick Bateman (and whisper doesn't like to trim those out for segments), but I don't think I had any perceptible quality problems at the end of the chain with inferencing.

However, """imperfections""" (and I say that loosely, I'd rather classify them as quirks) like with Silent Hill voices do get maintained.

Anyways, the last thing I need to mend is how I'm "leveraging" the dataset by making it actually leverage the dataset, rather than something like "I see you segmented your audio, so I'll make some loose assumptions about the maximum segment length to derive a common chunk size".

Aside from that, the general outline of preparing a dataset is (and I should add this to the wiki):

`whisper.json` for later reuse.

A lot of it should be fairly hand-held, but the biggest point is to double-check the end results (which I didn't do much of).

These sanity checks seemed to help my Japanese finetune actually sound almost as nice as it did originally, before I added all the bloat on top of "just transcribe these lines please and thanks".

However, while transcription will also handle the slicing (if requested) and the dataset creation, you can always re-run the other two steps (slicing and dataset creation) after the fact. They don't usually take so long so it's easy to just have them done after transcription automatically.

Just to clear up my understanding: The recommendation now is to not use all-in-one files and instead make sure the audio clips post-transcription are always under 11.6s?

Correct.

It's not the end of the world if you can't, but Whisper's timestamps aren't to be trusted as much as I had hoped.

Ideally you want them under 11.6s pre-transcription.

Again, it's not the end of the world if you can't. The web UI will handle implicitly segmenting anything too long (>11.6s, >200 characters), but as I keep stressing, double check how well they were segmented.

Anything that gets implicitly segmented, or outright thrown out, will be outputted to the output area on the right, but I've yet to have anything egregiously decimated (or at least, in a way that is noticeable outright).

I figured I'll (ashamedly) mention it here, since this issues thread was the reason why I removed it in the first place: I added WhisperX back. It won't install by default to avoid any more dependency nightmares, and you need a HF token anyways to make the best use of it.

I gave it another shot and I admit I was wrong to axe it. It just took a little elbow grease from the rather rudimentary implementation to really see the uplifts it brought:

-0.05 and 0.05 for start/end offsets literally fixes 99% of the remaining problems.

I'll do additional sanity checks, but it seems that with WhisperX, quality slicing isn't a crack dream.

It's not a big enough change to necessitate retraining finetunes, nor to retranscribe datasets, as the principle still applies: if you can have your audio clips trimmed right ahead of time, do so, don't rely on this. However, if you need to, it won't kick you in the balls trying to get accurate timestamps. It's as close to perfect as I can get it.