VALL-E Integration (and In Response To TorToiSe: a Quick Retrospective) #152

Labels

No Label

bug

duplicate

enhancement

help wanted

insufficient info

invalid

news

not a bug

question

wontfix

No Milestone

No project

No Assignees

27 Participants

Notifications

Due Date

No due date set.

Dependencies

No dependencies set.

Reference: mrq/ai-voice-cloning#152

Loading…

Reference in New Issue

Block a user

No description provided.

Delete Branch "%!s()"

Deleting a branch is permanent. Although the deleted branch may continue to exist for a short time before it actually gets removed, it CANNOT be undone in most cases. Continue?

As I may have hinted with my not-so-subtle commits, I'm working towards getting VALL-E integrated as an alternative TTS backend:

--tts-backend="vall-e"I'm backing this implementation as my VALL-E implementation:

The training process is pretty intuitive too:

However, I have some qualms with it so far:

And other concerns with VALL-E:

As for my thoughts on TorToiSe after everything from this being a silly rentry to using it, to now:

Above all, I just hope VALL-E proves to be my magic cure-all and I can just set up a machine to train LJSpeech or a reduced LibriTTS dataset, and come back to it after quite some time passed to get a good model. I honestly don't know how much steam I have left in me.

tl;dr: VALL-E soon, stay tuned.

How bad is it? Is it still something that could run on HEDT graphics cards or should I be pricing out refab P40's on eBay?

Edit: Should rerunning setup-cuda.sh be sufficient to pull in whatever's required for VALL-E?

My batch size is pretty much pinned to 16 for my 2x6800XTs (2x16GiB) if I want stability. Granted, distributed training is different from DLAS, where DLAS will take your batch size and divide by GPU count, but DeepSpeed will use the batch size per GPU. I'm not sure of the bare minimum requirement, though.

Also, you can train half/quarter sized models with reduced parameters by specifing

-halfand-quarterin the model name (soar-half/nar-quarter) for reduced VRAM requirements.Small improvement, but if you've already committed to relying on

phonemizerthen using it to generate the IPA vocab list from the training dataset is near trivial:Edit: In the majority of cases

phonemizeris just acting as a wrapper for libespeak-ng so you could just call espeak_TextToPhonemes() yourself if you wanted.Your doing a amazing job. This mainly beyond my understanding but it's impressive stuff. Do you have a donation page or something?

I've tried training unofficial enhuiz Vall-E implementation but with my resources I wasn't going anywhere unfortunately, so I gave up.

Have you got any success in training it?

I think that it is a shame though to abandon Tortoise, I've been experimenting with lots of TTS in these past months and the quality of Tortoise is the best to me.

It has its problems: it's really slow, it is unreliable/unstable sometimes, with very strange noises and repetitions and outputs of zero seconds sometimes. But it is very remarkable when it works well, the best by a mile among what I've tried.

I think we should 'just' find a way to fine tune in a stable way without losing the zero shot multispeaker capability.

I have an idea, but I'm not that good in programming.

When we fine tune we lose the multi-speaker zero shot capability and we degrade the reliability of the original model, at least it seems to me. I have seen in image generation this model called ControlNet which allows conditioning on additional input modes other than text.

For example, you guide the image generation not only with the text prompt but also with whatever representation you want. For example you guide the input with a heatMap, with edge contours etc..

They don't want to train a new generative high quality text to image model, they want to leverage the high quality established stable diffusion model. They also don't want to fine tune and unfreeze the stable diffusion weight as this might lower the output quality, overfit over the small dataset or increase instability in the output.

So they actuate a smart strategy for which they use a hypernetwork (which is like a mirrored version of a part of the stable diffusion) whose activations are added to the activations of the stable diffusion model.

The stable diffusion model is freezed, and only the hypernetwork is trained.

Controlnet is just for diffusion image generation models, but in reality it proposes a new way of fine tuning which should ease the process and make it stable, while retaining what the orginal model has learned during the original training.

It would be nice to apply this idea to Tortoise fine tuning.

Here's some reference:'https://www.youtube.com/watch?v=fhIGt7QGg4w' this video talks about the more general idea about ControlNet.

I hope I can spark the creative idea of someone more skilled than me.

Having said that, I'm very curious about Vall-E as well.

I'd like to advise against using https://github.com/enhuiz/vall-e and would rather propose to take a second look at https://github.com/lifeiteng/vall-e

The enhuiz implementation seems dead, the author is unresponsive and going by the open issues there seem to be problems with the training process, with multiple people reporting it producing garbage results.

The biggest gripe here is that the author has gone completely silent to any queries or other questions regarding the implementation and has been seemingly absent for over two months.

The perceived quality of the code is irrelevant, if we can't guarantee the correctness of the code in the first place.

In contrast to this, the lifeiteng implementation seems to be actively managed, has the author chime in on issues and discussions and, most important of all, was able to present some promising results so far: https://github.com/lifeiteng/vall-e/issues/53

Considering the lhotse + k2 + icefall dependencies, I agree, they are certainly cancer, but they are only used for the dataset preparation. I am sure it should be possible to reverse engineer the process so far, to just be able to prepare our own datasets for the training process, instead to rely on the supplied recipes.

That being said, I managed to get the LibriTTS training process running on my WSL2 Arch Linux on a 3060 12GB (though it was only out of curiosity, so I never let it train for any amount of time), and the author managed to get promising results on the smaller dataset with only 8 hours of training on similar hardware.

As for Tortoise, it was a mixed bag for me. Finetuning refused to deliver any results and the base model was able to produce promising results for some voices, but overly british accents or voices completely different from the source for others.

Overall I'd consider it a deadend, so I am happy research is going into other backends.

I'm no stranger to that, given how I'm pretty much fostering TorToiSe, whether I like it or not.

I think it's just chalked up to terrible defaults.

I should be fine after correcting these things. I imagine anyone that tried to use it fell into the nodev trap and assumed the defaults were sane (and desu I fell for it too, only because I made bad assumptions).

From my cursory test, I'd rather not try again:

Compared to the first implementation just dumping things similar to what DLAS does: bunch of files and parse the directory. Simple.

I could gut the newer implementation to have a simpler data loader, but I can't be assed.

About that. I got a similar error that I got with the newer implementation when trying to train with the first one (some assert and a CUBLAS enum thrown), but I tried again yesterday after doing some other things (I think using torch2.1.0 nightly worked), and from there I'm smooth sailing on a paperspace instance.

Although, the newer implementation refused to work on my 2x6800XT system somewhere along the pipeline (I already forgot what segfaults), while the first one did, so even if the newer implementation is favorable, if I can't train locally, I can't back it.

And desu, the first implementation using DeepSpeed feels like it'll mature over time by itself with any changes to DeepSpeed, while the newer one is up to the owner. Although, the newer implementation does leave room for me to work my magic and inject BitsAndBytes. DeepSpeed allegedly has int8 quantizing, but I can't seem to find how to use it, if it's even for training.

Ironically, I've only been getting decent voice-finetune results on my 2x6800XTs. I'm not sure if it's some inherent nature about multi-GPUs, or something between ROCm and CUDA, but whatever placebo it is, any of my future finetunes will have to be done on those and not a paperspace instance.

Yeah, the base model is too inconsistent for zero-shot. A specific subset of male voices will work fine, but everything else won't.

I just hope I can get results with VALL-E. I can sort of understand a lack of a generalized model, but I feel I'm once again the only shot at getting something cobbled together,

I crammed BitsAndBytes into the first implementation using a similar "injection" I did with DirectML jerryrigging for CPU-only functions. In hindsight, I could have also used this method with DLAS, but oh well.

desu the gains aren't as large as adding it to DLAS, as I'm only able to slightly bump up my batch size from 16 to 18 before it gets unstable and occasionally OOMs on an A6000 (48GiB VRAM). I'm not sure why it spikes 4GiB of VRAM occasionally, or when it tries to save.

I can make some guesses as why it's not a huge improvement, but oh well. Bit of a bummer it didn't drastically let me cram a larger batch size in.

Got training to work on my 2x6800XTs with the newer implementation, and I'm a bit skeptical.

I think I got my ducks in a row with the first implementation (these were at 10000 steps, dataset size 55, batch size 1, defaults for all the other knobs, trained on my 2x6800XTs for I think an hour? an epoch can be ripped through in about 10 seconds):

I realized a few things:

It just seems odd though, there's definitely something off, especially:

I'll just have to wait and see how things shape up with baking the model. If it turns out decent, great. I'll be comfortable with renting out a GPU to do bigger training on (or cave and buy a 4090, as the prospect of renting for pennies sounds worse than just splurging $1500 on another GPU).

There are cheaper ways to get 24GB of VRAM

VRAM isn't my concern. In fact, I found both VALL-E implementations to be poor when it comes to VRAM. The one I'm backing just scales horribly (between an A6000 and an A100-80G, I could barely bump up the batch size), and the newer one never wanted to use more than 12GiB as it decides what batch size it wants.

I already have a collective 32GiB with my 2x6800XTs, so VRAM is most definitely not an issue. In the context of VRAM, a 4090 is a downgrade in capacity, and most definitely a Pascal card is a downgrade across the board.

It's just an idea to float about getting an actual card for ML for improved throughput if I'm going even more balls deep into it, rather than reusing cards I incidentally have that incidentally do okay. P*p*rsp*ce took a massive dump on me this morning, so I'm skeptical of using it (or any rentals) any more after being burned again.

Anyways, at step 27000 (after switching to bs=16, ga=2), the NAR sounds nearly the same as the reference: https://vocaroo.com/1jaGF5sduPQH. There's a bit of warble still, but I'm impressed. The AR still sounds iffy.

Step 40000 and the AR finally sounds better: https://vocaroo.com/18egrMwF6W4w

Still terrible, but it at least has audible speech.

@mrq what dataset you use currently. I can try on my system to double check too if it helps.

Some ten hours of some LibriTTS labeled LibriSpeech-Finetuning that I nabbed off some P*p*rsp*c* article about VALL-E, except it includes everything in the archive and not the 9h subset. The link to I itself is under my VALL-E fork repo in

./scripts/prepare_librispeech.sh.If you got a few days to kill, go right ahead. I have a small repo on HF with the data already quantized and phonemized to avoid going through the hoops of my rather-fragmented preparation process.

With your current working directory set to your

ai-voice-cloningfolder:source ./venv/bin/activategit clone https://git.ecker.tech/mrq/vall-e ./modules/vall-e/pip3 install -e ./modules/vall-e/git clone https://huggingface.co/datasets/ecker/libritts-small ./training/libritts-small/./training/libritts-small/config.yamlto your likingexport CUDA_HOME=PATH_TO_YOUR_CUDA/usr/local/cuda-11.8/or something. For Docker images with CUDA-11.6, and you installcuda-nvcc-12.0or something, you'll need to point to the newer one.export ROC_HOME=PATH_TO_YOUR_ROCMbin/hipccinstead of/opt/rocm/deepspeed --module vall_e.train yaml='./training/libritts-small/config.yaml'I restarted training two nights ago and fiddled with some more settings yesterday, so progress restarted, as I didn't trust the initial dataset to be "right" in the sense of using the entire dataset, optimally.

I also manually validated if BitsAndBytes was even working (it's not).

torch.nn.Embedding, rather custom ones that inherittorch.nn.Module.torch.nn.Linearforbnb.nn.Linear8bitLtcauses errors (not surprising, since it's not integrated with DLAS, and naturally).So I'm stumped with BitsAndBytes. I can give it more cracks at it later today, but even DeepSpeed's weight quantization doesn't give consistent VRAM savings (sometimes my GPUs will sit at 12GiB and then it'll sit at 15).

I will admit I did cave and get a 4070Ti, as:

The only caveat is:

I'm stupid. To spare the gory details:

Although:

Hello, I don't know if it's of any concern, but someone on the newer repository uploaded a trained model as detailed in this thread: https://github.com/lifeiteng/vall-e/issues/58

I managed to download it and ran some of my own tests, which I wanted to share in case it's of any interest.

Solid Snake:

(Source) https://vocaroo.com/17lhindJicrD

(Result) https://vocaroo.com/1b3Cqih5Lgtb

Exdeath (FF5):

(Source) https://vocaroo.com/1fMlLIejplOt

(Result) https://vocaroo.com/1oKsiTHfuTPH

Jecht (FF10):

(Source) https://vocaroo.com/1jMNgguHCZE8

(Result) https://vocaroo.com/1zqjXWgg7Fzs

Vile (Megaman X):

(Source) https://vocaroo.com/19KuGc5bMtd1

(Result) https://vocaroo.com/19J6GpGavMxy

From the first glance it seems to be running even slower than Tortoise.

Of my samples, only the Snake one seems to match the speaker's voice and even then he seems a bit too.. jolly?

The others don't fit the speaker's voice at all.

Sadly not the silver bullet I was hoping for, but I guess it all depends on what's in the model again.

Neato.

Yeesh. I'll be a pessimist and assume (cope) that a lot of that time seems to be just bruteforcing through unfavorable conditions with (most likely) zero optimizations:

I feel like it has a similar problem the first implementation has: they're made by grad students with lab rigs who only know ML and nothing else. Don't get me wrong, they know it better than me, but they're not pragmatic (for lack of a better term) about how they go about it. I just can't really place my trust in either implementations after seeing the warts.

Thanks, I can't be assed to try and pick apart how to use the newer implementation for a third time for cursory tests.

I'm a little impressed from its results, a very small little. The model itself definitely isn't a tortoise replacement, but it at least shows it can provide something. My only concern with how little actual moving parts are in it, there wouldn't really be any room for bandaids like for TorToiSe.

There's something off about it outside of the audio quality, wrong pitches, and I suppose the general tone. I can't quite put my finger on it. I wonder if it's an issue with how the phonemes are processed, as I think it's only using the base settings for phonemizer (no stress symbols, no spaces). It sort of sounds like what https://ipa-reader.xyz/ spits out.

Most definitely.

For zero-shot inferencing applications, diversity (ick) is a HUGE factor in having a good model. There's only so much data to sample from when trying to mimic voices. I worry that when I finally nail training a small model, that I'm going to be in a world of hurt trying to pick every piece of clean audio I can get and adequately processing it (although the web UI is rather decent at that now). The magic of TorToiSe is in the dataset it was trained against, as its author mentioned not using just audiobooks (a bit ironic, since it still has its biases on how well its zero shot inferencing performs).

I think the other issue is that, depending on how conforming that implementation is, the paper calls for using only three seconds of audio for zero-shot inferencing. I think I saw some commits about different "prefix" modes (I think an analog to how I was changing how I'm computing latents, and the 152334H fork having its different settings for it too), so it might do better with more than three seconds to play with.

However. TorToiSe has definitely shown that finetuning a model that's semi-semi-competent is viable. I could care less about a "10/10 amazeballs" model at zero-shot when you only really need a semi-semi-decent model to finetune from. That's more of what my goal is/should be: just get a good starting point so people can finetune off of it.

I suppose one last thing before I go back into my hole for another few days: training it is shaping up to be a real bitch and a half. I suppose it's only natural it is so, given it's a language model. I'm doing some sussy "warmup then decay with restarts" to quickly train it over painfully bruteforcing it with the LR decay that both the newer implementation used, and DLAS/TorToiSe does (for finetuning at least).

Rereading the paper trying to procrastinate sleeping, and there's some things I guess that would have been nice if it was disambiguated, rather than inferred from both implementations. The original VALL-E:

The training process seems fairly simple. It's just the first implementation does it the rather classical way of training by batches, but I'm not sure how much that'll be a problem. I worry I need to revamp the both the transcription process and the dataloader to replicate the original paper better.

Progress report for whoever cares about my descent:

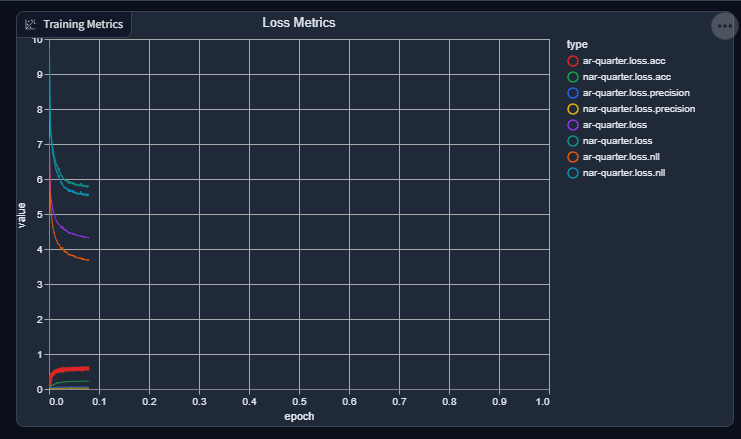

-quartertests; it's not worth the time testing a gimped model if I want to validate that I can even get decent results with this implementation.My thoughts and concerns:

Some whacky ideas I'll spitball that I won't probably do:

Roadmap / Goals:

I just hope that things are smooth sailing from here on out now and I can use the time waiting to train to finally relax.

The japanese tortoise model is really cool. Would VALL-E X provide better results?

Reading through the VALL-E X examples, it seems to be able to seamlessly switch between English and Mandarin, while preserving accents.

Does this mean that we could do something like train against Joe Rogan in only English, then have him speak in fluent Japanese?

Is the VALL-E implementation you are working on capable of Japanese?

Forgive me for butting in, but howcome you haven't worked on building a more varied dataset then? There's hundreds of hours of video game dialogue & podcasts available for you to build a more diverse dataset from, not to mention other varied audio datasets that could be included.

This issue reminds me of an LLM paper I had seen here https://arxiv.org/abs/2203.15556, that seems to coincide with the dataset claims tortoise makes, and your woes. I think it would be worthwhile to try scaling your dataset size instead of trying to scale your model size in it's place? I would test the theory myself but lack the hardware that would actually be suitable for training to test this theory so feel free to call me out on it.

The Mozilla Common Voice Dataset is over 3000 hours and CC licensed. Podcasts, which one might have to transcribe (or at least proofread) manually, aren't a wise use of limited developer time by comparison.

Hard to say.

I feel whatever base VALL-E puts out for Japanese is an indicator of how well VALL-E X will perform, as the only difference in implementation between the two would be annotating language during training, be it from additional tokens or another input. I'm not too sure how I would go about it, as there's no exisiting implementation for me to

leechdraw inspiration from.Very. I'm more impressed with the VALL-E X demos moreso than base VALL-E's demos.

mmm. Should be. I imagine for testing a VALL-E X implementation, I would source a Japanese speaker that sounds like him and train against both of them. The limitation is getting a soundalike.

The magic of VALL-E X is being able to sample speech against an acoustic prompt (voice latents, in TorToiSe terms) similar to your subject. That's sort of what LM-based voice cloning does. I imagine the secret fixin is just providing tokens for language (like a start/stop token, but start-English/stop-English start-Japanese/stop-Japanese), and the ability to have multi-lingual speech synthesis is just an emergent property of basing it on an LM, or some gobbeldygook.

Mhm, should be. The only hurdle is trying to mend the phonemizer to work on Japanese again, as I remember it breaking at 2AM and I couldn't be assed to bandaid the phonemizer.

I will, don't worry. I just need to babystep through this and not throw in too many variables. My crux with my tests before were not having a clear focus in how I should go about testing and experimenting.

I'll probably get to sourcing a master dataset while I'm training models for the final step. For now though, I need narrower datasets for tests to ensure things can in fact scale up with the implementation before sinking in so much time for a bunk model.

For zero-shot inferencing, of course a large/diverse dataset is necessary, but that won't do any good if you don't have a model big enough to learn all of it. I found the quarter sized one to cap out and lack the capacity to really learn anymore without a painfully astronomical training time to bruteforce it, if time would solve it.

That's definitely a worry I have that would "filter" a lot of people trying to roll out their own models. VRAM is no issue, as with enough optimizations, I can have a full sized AR and NAR and wiggle room on 12GiB, but the crux is a tradeoff between compute time and compute throughput; I can have all the speediest VRAM in the world, but it's no good if I can't use it fast enough. I have relatively the same throughput at bs=4 as I do bs=8 anyways.

And you can only really get good compute with Ada cards (4070Ti and up) or multiple Ampere cards. There's always """r*nting""", but it's not a good value proposition, at all, especially for testing.

How convenient. I want to believe, for zero-shot inferencing, more speakers is better than more hours, so this is probably a great way to get varied voices.

I feel any dataset is going to have the same amount of time to transcribe and validate desu. I can't really re-use existing transcriptions, as:

It pretty much took 5 hours last night to re-transcribe LJSpeech in WhisperX large-v2, and probably an extra two this morning to quantize and babysit the phonemizing process (for a valid but still God forsaken reason, phonemizer will make a temp copy of the espeak lib on every call and only cleans it up when the process closes, so it'll crash after X amount of phonemizings). I suppose I could get a better transcription process, but WhisperX is probably the best I'll get.

I've had outstanding results with WhisperX once I started running it with

--align_model WAV2VEC2_ASR_LARGE_LV60K_960H. The downside is that it doesn't support many languages out of the box (but Japanese is one of them, IIRC).However, I don't think you need to bother with that though because in the sample I downloaded it's already segmented. I grabbed the latest delta of the Indonesian corpus (only 110 MB) and the longest clip is only 10 seconds.

I mean if that's the case I'll have a second 3090 with NVLINK sometime next month, so maybe that'll make the difference.

In regards to that paper, it basically showed that most LLMs where underfitted , the cure was more data and training st the same model size. It's probably going to be a necessity given the results, so maybe it's going to be more beneficial optimising the training itself before devoting to the training.

Yeah, I have that model load for English only just so I can try and get something together when reintegrating back to the web UI. I should make it another option, but I can't be assed to at the moment.

It's enough of an improvement getting everything together + VAD filtering that I can finally rely on it for transcription after being scarred.

A single 3090 allegedly has similar throughput to a 4070Ti, but I haven't validated it myself after all the optimizations I cobbled together. It just feels like I'm having nicer throughput with my 4070Ti over using a P*p*rsp*c*e A100-80G before they fucked me in the ass again.

Ah, I guess that would explain some things. Pretty much most of my tests just seemed like in the worst spots where it's too big to overtrain and get results like the Joe Rogan tests, but nowhere near large enough to get it to not be so grossly underfitting. The LJSpeech one seems to be going a little better than the LibriWhatever subset I had over the weekend, but doesn't seem to be improving any more.

If the valley between the "too little and you overtrain" and the "not enough and you underfit" is just that large, I suppose I'll start working towards getting a ginormous dataset together.

I'm pretty much all out of my magic tricks to make training better.

Well, there's reusing/sharing the text / acoustic prompts embeddings between the AR and NAR, but that doesn't seem like it's much of an issue for training.

What would such a dataset entail?

If you are not to shy away from using materials with legally uncertain licensing issues, then

I'd happily contribute my growing collection of video game character samples (extracted straight from game files) + transcriptions.

Not too sure. It'd probably be a mix between:

My only qualm with using sources from the collections on the wiki is that almost all the voices there are one single file, so transcription/segmenting will be a bit of a pain.

Of course not, I'm fine using whatever. It's just slightly more convenient to use "open" speech corpora as they're usually cleaned up well enough.

Sure.

I'm so mad. I had a decently lengthed followup, but because I used a stupid emoji that the text entry field suggested, it ate it all up.

Pah, oh well. It was mostly outlining a path I should take by using actual audio from vidya rather than audiobooks as audiobooks have the inherent problem of not being varied enough.

Vidya also has the added benefit of coming already segmented in small 2-5 second chunks, as well as being clean studio recordings.

Plus, a lot of them can be easily extracted from game files as well.

Progress report:

Inferencing with VALL-E is integrated into the web UI, with some warts.

Training wise, I found it's "relatively" """easy""" to """""add""""" additional voices with an existing dataset on an existing model, and I imagine finetuning a single voice might work nicer. I'm doing some funny shenanigans by quickly warming up the LR to 2.0e-4 for an epoch's worth, then decaying down to 1.0e-6 for 1000 iterations (which in my last set it seemed to be for 9 epochs).

I might need to continue the above procedure by adding in more and more voices to the dataset to find a point where it'll stop overfitting. I'm just worried how many voices I'll need, and I worry about risking frying everything by doing these pseudo-restarts too much.

Aside from that, there's not really much else for me to do besides re-read the VALL-E paper / both implementations while I keep baking models and shoving in more voices, since there's some things I just don't quite "get".

Oh well. I shouldn't sweat over the issues so much; as long as the evaluation/validation output sounds fine during training, then I can just keep training a model and eventually resolve any issues with inferencing. It'd be nice if I had someone to come swoop in and call me a dumbass and other quasi-derogatory-slurs for neglecting details, but I imagine there's no one else that really has an idea about VALL-E outside of the people behind the M$-backed paper, and the two implementation writers, all of which seems to be behind a prohibitive language barrier (or in the case of the one I forked, unresponsive). I'm in no rush to nail out a working model, after all.

I imagine my issues, once again, stem from an extremely small dataset size. As mentioned:

And the paper mentions it several times:

I suppose the strength of VALL-E is that given an extremely large dataset (60k hours, 7000 speakers), the LM properties it boasts over other TTS systems emerge. So I guess I'll just keep shoving in more and more and more and more and more data.

I'll need to come up with a way to try and crowdsource a bunch of data then. Even trying to come up with what vidya to source my voices from is leaving me feeling overwhelmed already (both from even trying to think of where to pool from, and storing it all).

Bear in mind, I am merely a weeb with no knowledge whatsoever...

With that being said...

tldr You should enforce high example standards, and pool the efforts of the community/other people, and use anime transcriptions instead of/in addition to vidya

To preface, based on reading your commit descriptions, and paying attention to your mannerisms, I assume you don't give a fuck about morality. So i have taken that into consideration when writing...

One of the biggest things, is that you have to leverage yourself correctly

People like Roukanken can immensely help you, if you let them

There are many people who would love to contribute to this project, but who are unable to from the coding side of things...

And lets be honest, its definitely not worth your time to collect/process data, as opposed to developing the code...

But there is a way to utilize this manpower effectively...

You need a way to publicly delegate tasks, and collect the data in an efficient matter...

We could create a google form for something like this...

It would be a public form, that anyone can sign up for, and you can delegate certain materials to different people, with varying metrics(ie I need someone to get these files, I need someone to transcribe this files, I need someone to split these files, I need someone to clean these files, or you can simply assign the whole request to one person)

The biggest thing that would be needed for this to work effectively is...

There are more complexities as well, such as managing voices per character, background music/noise, but as far as scale, anime may have you covered.

I am unsure of the best way to store the files but once something is completed, it is proabably best for you to simply download the files, then be done with it(after all, there really is no need for anyone to have the final,perfect cut files aside from you, especially if they meet quality standards)...

Advantages to using this method

Disadvantages...

To mitigate some of the disadvantages, you could "assign" someone to help with this, ie it would be easier to train one to three people to understand what you need in an audio dataset, then have them actually verify the incoming data/audio for you,then simply trust their judgement, as opposed

to you manually verifying each dataset. That can significantly free up your time investment...

I am unsure of your current process of getting data ( I know that you use certain game rips and libraries),

but for fueling a massive, unethical dataset, desu I think this is the way...

The only thing, is that there is no pre-existing "anime library", so in a lot of ways, this would be the first of its kind...

If there is an existing library, ie where people have ripped audio VA from characters and transcribed them, it would be far easier, but to my knowledge this does not exist.

Where do we get the material?

Fortunately, anime is very easy to download out in the wild

Various websites offer download utilities, and there are some that allow for downloading anime in bulk...

However there is still problem... how to prep the data?

Transcriptions and community service

There are various "transcriptions"/anime fan transcriptions, as well as subtitles for various animes...

These files provide the ENTIRE dialouge for a given episode of an anime, with both English and Japanese transcriptions. This means the accuracy is pretty good (provided the authors were accurate initially. For Japanese I believe your software uses Hirigana/Katakana? That would be one issue, but I am assuming we could just throw the transcription into one of those online websites that would simplify the kanji into hiragana/katakana)

But...

How to split?

This process would be a lot of work for one person. Unless there is a superior method.

This is where a little investment would be needed to create a proper tutorial...

Essentially, we could teach community members, and incoming members how to contribute to this project by...

Generally, most of this can be done through audacity

(If I am being honest, there is probably a way to automate the "cleaning" aspect of audacity, ex. autorun a script that will take a batch of files, and apply a (NoiseRemoval>compressor>Equalizer))

You will need to invest a little bit of time into making the process as straight forward as possible, and being CLEAR as to what you need, and do not need, but it would get you brand new, high quality audio files

You will proabably have to "inspect" the audio somewhat, but that's what you can train someone for....

Part of me honestly wonders if there would be some way to match the transcriptions to the audio, then split it automatically. In theory, if you could do this, this would actually reduce the need for community involvement,

because it would be as simple as getting the transcript, audio, splitting, cleaning, then using. You could basically create a one click system, that gives you the data you need (albeit, with some light inspection)

However, my concern is that some transcripts do not include timestamps, making this somewhat difficult... maybe someone has a creative solution?

Where to store?

Harddrives? cloud services? Exactly how much space you need? Im sure people would be willing to pool some shit together for you...

So to recap

I would say in terms of collecting data in this fashion, you would have to shift to being a little more of a manager and a coder, as opposed to straight doing everything yourself, but fuck it...

There are also other ways to get audio that is HIGH QUALITY, PROPERLY TRANSCRIBED, but that is less "morally acceptable", but if you would like to talk on these, you can hit me back...

Lmk what you think...

I'm willing to help out a bit more if you are interested...

Almost all of what you've proposed above can be done automatically.

whisperxproduces millisecond-granular timestamps per word (in ASS format, like Anime subbers use), those timestamps can then be fed intoffmpegto produce segments of the appropriate length. Cross-check against fansubs (or the official ones, if available) and throw out any that don't match.I wasn't all that familiar with Whisper, but it does seem quite awesome.

I guess at that point, if what you say can produce the segments, then all we would need to do is feed him the data/anime?

Can WhisperX detect differences in speakers/be able to "sort" multiple speakers? i.e. for a full anime episode, multiple characters.

There are proabably more efficient ways to clean the data, as well, I presume.

I'm not even sure if I need high standards. WhisperX does a damn decent job now at transcription and timestamping, and the VALL-E paper says just having a ginormous dataset is robust enough to noisy audio and some inaccuracies. Sure, it'd be preferable to have the data as accurate as possible, but it doesn't need to be 99.99999% accurate.

desu I just need ideas on what to source from (and a bit of where, as sounds-resource.com seems to be missing a decent amount of stuff that crossed my mind). Sure, it'd be nice if it was all handed to me transcribed and neatly prepared, but asking for it to be transcribed is a bit of a big ask, as I'd pretty much require only transcriptions from WhisperX + large-v2 + VAD filtering enabled, which requires a HF token. It's not a huge deal for me to do the transcription process itself, as a few hundred lines can be crunched through relatively fast on my 4070Ti.

My qualm with anime (dubs) is that there's a considerable amount of extra effort needed to get decent audio. I imagine the best case scenario are BD releases with the vocals on a separate audio track, and you can just segment the audio by subtitles and it's all good, but the worst case is aired anime won't have that. I also don't think any of the few anime I have watched were dubbed anyways, so I won't have much of anything to source from myself.

In terms of """ethically sourcing""" a dataset, I don't really have an qualms about that.

The only thing left really code-wise is just to make my own VALL-E implementation rather than rely on an existing one and continue working around its design desicions, but even then that's pretty low priority.

Pretty much what I had in mind. I'd settle just with something to submit character name + source and a link to the audio (or at the very least, where to get it).

Actually, the final, quantized audio that gets trained against doesn't take all that much space, so something ginormous won't actually be all that much of a detriment. It's just the source audio that becomes a bit of a pickle if I keep it on disk. Worst case, I do have several, several drives (and could always buy another 10TiB one), but I'd just have to do bookkeeping as I'm quite a datawhore.

desu my concern over VALL-E X is quite a ways off (or at the least, even having a Japanese model). Incorporating Japanese would have to be when I do get something cobbled together, as I'm really not sure how much of a problem it would pose with training with a multi-lingual dataset, as much as it would definitely help increase my voice variety with including my sixty-or-so voices I already have transcribed.

From here I'll just generally address the rest of it.

I appreciate the thought-out planning on it all, but at the end of the day, as long as the samples are somewhat-put-together, I'll accept it: anime, TV shows, movies, what-have-you. Just anything that isn't an audiobook reading, as that's where I feel is the less likely to provide much of any variety. I'm not strictly restricting it to just muh vidya for the dataset; it's just both the best and easiest to source from, and what I'm 99% likely to source from myself.

On the flipside though:

For now though, anyone's free to drop a link to what they would like for me to train the model against. Between my usual weekend rituals and RE4make, I'll probably try and cobble together more voices to feed the training model, as I fed it the rest of what I had on my training server and it seems to already have peaked, given the little improvement from doing another LR restart.

Yeah. I pretty much just need the audio, and WhisperX / the transcription part of the web UI will handle the rest.

With diarization, yeah. It's not something I've tested, but the web UI's integration with WhisperX can use it, although I'll need to uncomment one line.

Hey @mrq have you seen this one? Thoughts?

https://github.com/svc-develop-team/so-vits-svc

Unless I'm misunderstanding it:

I suppose it can fill a specific niche, but those two things for VITS (whatever they're classified as) are kinda not in my scope. Even if not for those things, I wouldn't really dip my toes into it since it seems it has its own hands working on it. I only really dipped my toes into TorToiSe (and by extension this VALL-E implementation) it was pretty much abandoned (at least, at the time, I don't remember the timeframe between me slapping a web UI and finding out about the 152334H

fork) but with lots of room for improvement.

Also I don't think I can have another ecosystem under my belt.

On the other side of things, the gravity of how much data I'm going to need to feed this beast is starting to weigh down on me more. I greatly increased both the speaker count and lines being fed (I think I'm up to 51 speakers, 18484 lines, not sure about total duration) and, while I'm not so concerned about the throughput rate in terms of the entire epoch, it only seems to amount a minor amount of an increase in output quality.

The AR's evaluation output is sounding better, but the validation output is only really sounding somewhat passable for English with the old-ish (as of a couple of days ago) voices, before I threw in the rest of Persona 3's voice lines into it. The NAR sounds better as usual, but there's no point in the NAR being good if the AR it gets fed isn't up to par.

I guess I'll just keep feeding the beast more data to train against. I'll let the Persona 4 lines (non Golden, unfortunately) bake for a day or two before throwing in more voices.

I could give up and feed it a LibreWhatever dataset, but I really don't want to feed it audiobook readings; I'm already getting better results it feels by feeding it muh vidya audio.

If you don't mind sharing your collection @Roukanken (or anyone I suppose), I'll be happy to nab it and dump it into the hungering beast. The audio itself is fine, as I'll only really be comfortable with the transcription if it was ran through WhisperX large-v2 + the VAD filter (as I haven't tested the segmentation quality on anything else).

So, I was letting the FUD get to me about whether or not I should have backed the newer implementation instead. I was getting the model code transplanted into my fork, and as I was stitching up the dataloader to the forward pass, I realized something.

The first implementation (enhuiz) will:

In hindsight, it makes sense, as it'll train in a way that reflects the way it's inferencing. This should have great zero-shot capabilities, but at the "cost" of it being stubborn to train, and terrible to try and have as non-zero-shot TTS systems (like traditional TTS).

The newer implementation (lifeiteng), doesn't seem to do that. It'll pull "pre-computed features" (I imagine it's just the quantized audio but abstracted through Lhotse's API), and will use the "input prompt" as the target. Contrary to the above, it's quicker to train, but harms zero-shot-ability, as it's not reflective of how it's inferenced against. It's not truly leveraging the capabilities of an LM.

However, I can't really knock it for that, as it at least has a "working" (albeit, not up to par) model, while the first implementation doesn't seem to have one yet.

However, one flaw is that I'm required to keep similar voices together, and not mix speakers within a folder. It's being a pain since a lot of more voices I'm sourcing from are all one incestuous mess of uncategorized filenames (pretty much everything I either have to rip myself or I've found ripped already; I only got lucky with being able to categorize the Persona 3 and 4 voice files).

So what are the guidelines?

How many segments minimum?

Ideal clip length?

Just English?

Any particular way you want it labeled?

Also, does this mean you are including another implementation, or are you sticking with the one you are currently using?

@mrq

Not sure; I still have about 20 voices that have sub-50 lines that I'm not too sure how much would help shape things, but I imagine at least 10 lines would be fine.

Ideal would be between 3 and 12 seconds, but whatever the transcription tab the web UI will spit out seems decent enough. The paper mentions 10 to 20 seconds, but it's better to not have audio lengths too long.

Mhm. I'm worried adding Japanese voices might be too much right now. Phonetically it should be fine, but I need to wait for this next batch of voices to cook before trying it.

Nothing in particular. One big folder of all of a character's dialogue is good enough, and I'll just feed it through WhisperX to transcribe and timestamp it adequately.

It would be a big help when I further scale up the dataset again. As of now I've fed it:

I also have Demon's Souls, Elden Ring, Tales of Symphonia (and I need to extract Vesperia skits), and FFX, but they're all uncategorized so I can't really do anything with them outside of some clever tricks with modifying the dataloader process.

Here are three links that I think would be a good fit.

https://www.youtube.com/watch?v=XkMoingR1p0 - JFK

https://www.youtube.com/watch?v=hzW-h_Rm8Jo - Dempsey, really good emotion

https://www.youtube.com/watch?v=1S48jHXh44U - Misty, female variant

In general, Call of Duty has really good voice acting for the zombies portion, and all their characters have 10-20 minutes blocks of audio, all of which is clean.

Between all the games, there is proabably a large amount of characters we could use.

Would you like me to download and link these for you? Or can you do it on your end? (These are larger chunks of just one character each, so I figure it be easier enough to just run through whisper?)

Also...

I can rip it with yt-dlp and transcribe from there. I'll add them into a next batch after this one gets chewed through (at this rate, I think another few days).

No preferences.

If it were on rather monotonous audiobooks, I would probably try and have "uniformity", but because it's on real-er data, I don't think I should have uniformity like specific accents.

Have you checked out the Pony Preservation Project Datasets You can found them here:

https://mega.nz/folder/jkwimSTa#_xk0VnR30C8Ljsy4RCGSig/folder/OloAmDqZ

and here (These are non-MLP datasets):

https://docs.google.com/document/d/1y1pfS0LCrwbbvxdn3ZksH25BKaf0LaO13uYppxIQnac/edit#heading=h.6jgcpmrwa3fq

all of them are already filtered, organized, cut, and transcribed for you, so that could hopefully make it easier for you.

Oh right, I forgot I can leverage /mlp/'s autism. I'll nab them too for the next feeding time as well. I'm sure they'd also appreciate if I did train it on their technicolor horses.

I was going to say it's a bit of a shame that most of it is already Persona 4, but if it's Golden, then that's golden. It does have S. Links too, which my rips from sounds-resource doesn't have, so I might as well grab those regardless and replace what I have.

Sugoi. My only worry is if a cut might be too long and either get culled anyways or will cause OOMs when the stars align. However, I might be able to remedy this with having my fork conform to the paper better (have the input audio prompt trimmed to 3 seconds; it might also have a training throughput at a cost of not being able to having as-strong variable length input prompts)

I swear every time I grow a wild hair and dive back into the implementation I forked, there's another twist with how it behaves.

To be brief, I was trying to both have a way to calculate duration from the encoded/quantized audio and then see about trimming the quantized audio down to 3 seconds to feed for training (to see how much used VRAM I can reduce and how much of a throughput increase I can get).

Turns out, that not only does the implementation randomly selects a different utterance to use as the input prompt, by default, it can use up to three random utterances and combine them. I say up to, because it does a probability check to see if it should continue. This most definitely will explain the wild variation in VRAM use between steps, so I should be able to make this a more sensible amount.

I'm pretty sure this is overkill, but in theory it should help with try and dissociate input length from inference quality, but at the same time, I think it'd be much, much better to just have it poll one utterance.

Enforcing a maximum of 3 seconds for training has let me set a batch size of 4 to a batch size of 16, for the same overall iteration rate (so I effectively 4x'd my training now, I guess I've been bottlenecking my 4070Ti). I think I'll just keep it that way.

Yeah the one on the doc are the golden version! At least according to the anon who ripped them (I didn't check it myself)...

Can whisper run through a batch of single files with the same level of convenience? There are some clips I have where it is like 40 .mp3 files, all unlabeled, but for the same character. I figure I would just stitch them together into 1 file anyways, but I am just curious.

Kind of. You can specify multiple files when you run it, ex:

whisperx --model large ---task transcribe --language en file1.wav file2.wav file3.wav ...Yeah, they're from Golden. I tried adding in the Chie lines the other day (since I will admit the Golden VA grew on me despite initially preferring the original Chie for some time) and I couldn't for the life of me get it processed through WhisperX; it would kill the entire process when it got passed the first three or so lines. I tried remuxing it in ffmpeg but no luck. Oh well. I was only doing that since I had to revert from a checkpoint the other day, as I completely botched something when I was moving my data around (more on that specifically later).

Yeah. My naive way about it is to just throw it all into one audio file (I can't recall if I mentioned the steps to do it with

AudacityTenacity on the wiki somewhere, but that's what I would do to stitch them into one audio file), as I have some voices that are unfortunately one audio file. That approach seems to work almost just as fine as having audio separated for a single voice.I imagine it might get me out of a pickle with completely unlabeled multi-speaker rips with diarization, but I haven't bothered trying it yet.

It seems to be effectively the same as programmatically doing it through the web UI (in the sense the models are still loaded during each iteration).

I think technically you might be able to get better throughput if you process one mono-file instead of separated files if you have VAD filtering enabled, as the VAD filter "pipeline" allows for batching to increase throughput, and larger audios can be batched "better" (I don't think there's much of a throughput uplift if I have larger batch sizes set for sub-30 second segments).

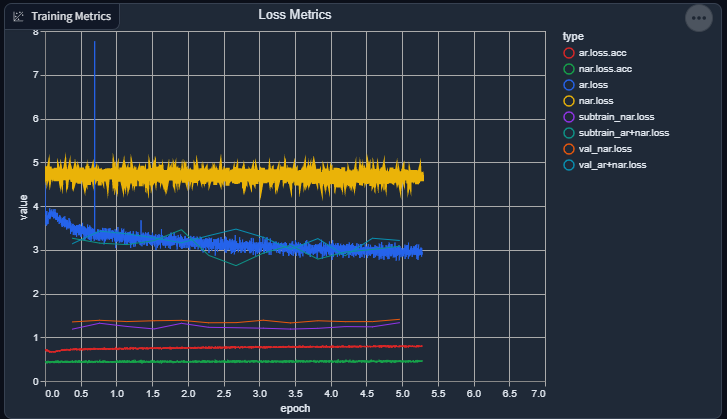

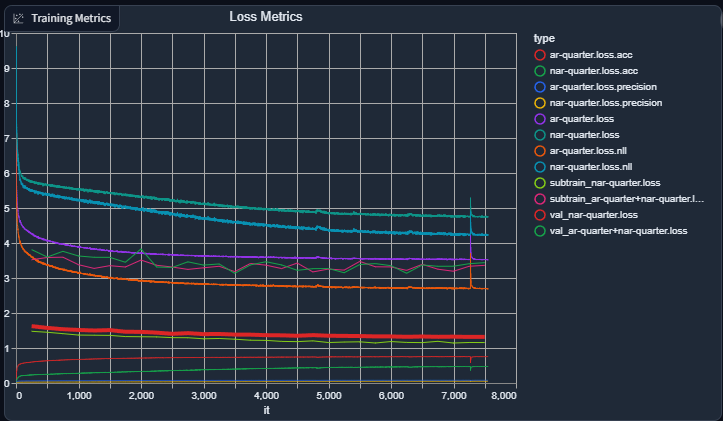

Anywho, I'm blasting ropes from how training is shaping up now. It was a really rocky start, but it seems to be smooth sailing now, as I'm getting actual clean real output now from utilizing both the AR and NAR to produce output, rather than playing by ear output from each model separately.

After my serendipitous sniffing the other day though the implementation I forked, I:

./training/{voice}/valle/=>./training/valle/data/{voice}/), and because the speaker name getter lambda was fetching the 2nd-to-last folder name instead of the last folder name, all lines were treated as the same speaker (data), effectively making the input prompt random data. After fixing my issue, reverting, and the above throughput increasesI was able to squeeze out some more "optimizations" to increase my batch size from 4 to 16 while having an even faster iteration rate (bs=4 yielded an average of 1.4s/it rate, while bs=16 and disabling GC per iteration yields an average of 1.04s/it). I was wrong to assume my 4070Ti was not bottlenecked and that batch size wouldn't starve it of throughput. Unfortunately, I should have gotten a 4080 instead for more VRAM, despite it being the worst Ada card at the time (at the time, because everything 4070 and below is just as bad).

Additionally, I realized I can also test actual inferencing during evaluation (RVQ layer 1 of the AR, RVQ layers 2 through 8 through the NAR), and hoo boy, it's actually decent output, unlike the monstrosity of my initial inference test (for some reason my evaluation/validation datasets are either Westwood Blade Runner or Kingdom Hearts):

I picked the ones with noticeable flaws in them so they're more apparent they're not just the reference clip. There's still a sizeable amount of the evaluation output that doesn't sound quite right, and the AR+NAR validation output is pretty rough.

It's extremely relieving to hear that it actually can work, and it's probably just the provided inference method being a bit sussy. It's also relieving that I don't need to keep shoveling more, and more, and more data, but I might as well keep doing it, as it still has issues fitting just right for outside data, at least, given the validation output.

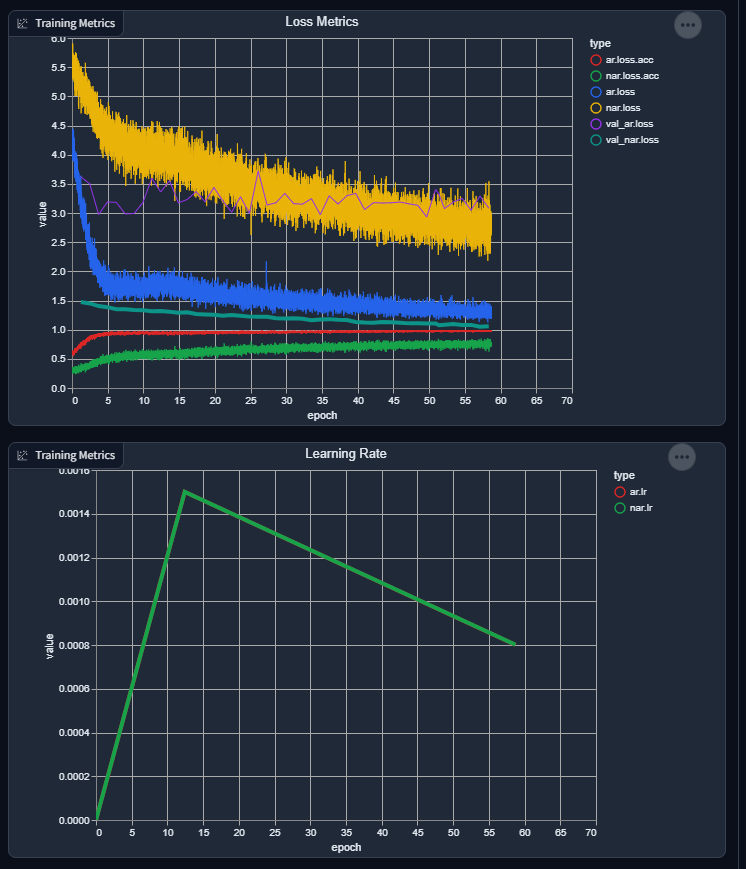

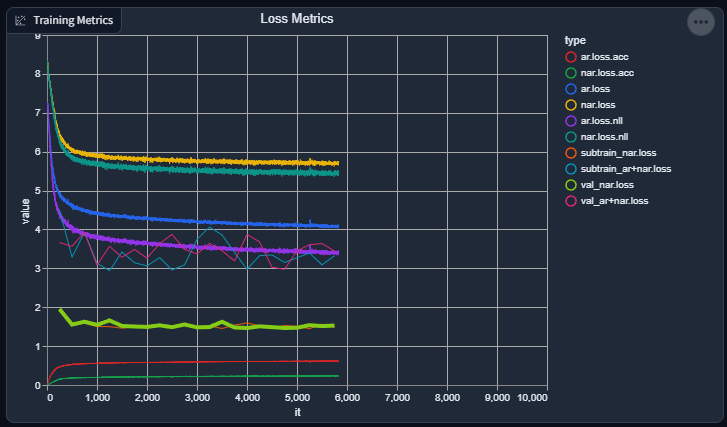

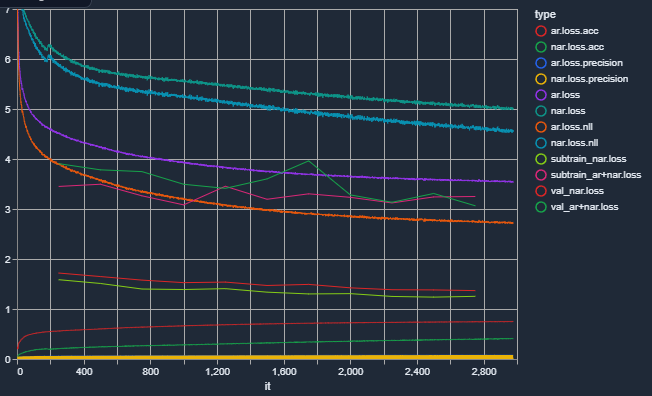

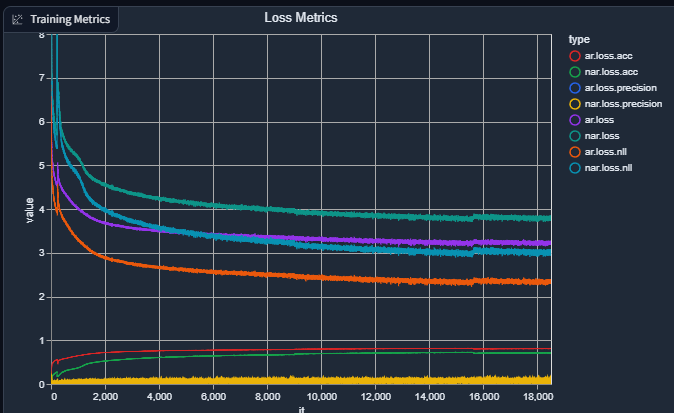

And my current graph (epoch count is related to the current dataset, I usually will do a soft-reset by loading the weights and not the optimizer state when I change the dataset):

I haven't added reporting the loss for the AR+NAR yet to the graph (it should be simple), as it's a recent addition so it wouldn't thoroughly be reflected in the graph yet.

I still have a lot more baking to do for it to be "just right", but for it to give quasi-decent output now gives me hope instead of FUD with it being a fools errand.

Thats awesome to hear.

So do you still want data? I've got some awesome clips lined up. Both Japanese and English.

Are we still at this stage?

How much more improvement do you think you can get out of the VALL-E implementation? Do you think it surpasses/will surpass your tortoise model? Also, VALL-Ex?

So whats the process looking like now? Is it just to keep training it and adding more voices until its perfect?

mmm

It's hard to say still. I think my new-er understanding of how the implementation works, and how VALL-E itself works, wants to say it should be pretty resistant to any cross-lingual tainting, but I'm having a really hard time trying to express how it might be. I guess it's just better to try it and see how it behaves.

I'm not sure if I should aim for it now, since I know the implementation is perfectly fine and works (albeit the dedicated inference routine seemed a bit flawed, but that can be easily remedied). Outside of cross-lingual/VALL-E X, the last thing on the metaphysical list is getting a decent pre-trained model together.

But, if I'm doing that, I might as well get it baking on Japanese too. If I'm lucky, there could be some crossover with either languages bolstering the other up in training.

Performance wise, I'm very sure this time I'm all tapped out on how much I can squeeze, outside of playing with fire and trying 4-bit training (I worry about accuracy issues at that much quantizing). I genuinely can't think of any other avenues for improvement.

Output quality wise, definitely can get more improvement, but it's a matter of how long it will take for it to get there. My training method is still pretty naive and flawed, so I can always refine that aspect.

Quality of life wise, definitely more room for improvement. I'd like to slot in explicitily providing a validation dataset (easy, but it's low priority), and there's probably some other things to muck around with but I can't quite recall.

I think in terms of:

it definitely can outperform TorToiSe. It just is pretty much now up to how the model itself is trained.

In terms of subjugating TorToiSe to try and have a cross-lingual model, definitely. Non-English TorToiSe will always be restricted by both its tokenizer and the CLVP/CVVP.

Mhm.

The beast must be fed until it starts exhibiting decent zero-shot capabilities (through decent validation output).

Are there's still any advantages to tortoise after playing around with VALL-E?

@mrq Feeding the beast. Here is batch 1 of some stuff I've been trying collect. This batch has 10-20 hrs.

The only problems...

But overall, these seem pretty clean. Let me know if they would work for you, and if there is anything I can do to prepare future lists better.

I'll probe through them whenever I get the chance next. I incidentally worked on the dataset preparation process to be more cleaner and not a pain (stuff like fixing the phonemizer memory leak, batch processing all voices, allowing subdir voices, etc.).

Sadly, I may have had some flawed reference audio, as it seems I've trimmed them a little too tight at the end. I've been noticing whatever evaluation output makes it out that it ends a bit too abrupt at the end, so I had to reslice and reencode all my audio again with a trim end offset of 0.2s instead of 0.05, for safety.

I'm doing another "restart" with resetting the LR and iteration count so it goes through another LR cycle just to jostle up the weights again. I noticed a lot of the older data doesn't sound too great (P3 mostly is what I've been catching), while the newer audio (some Westwood Blade Runner, Kingdom Hearts, a few P4) will sound pretty decent. I'm not too sure why there's the disparity.

Not much of a progress report, since it still boils down to how much time I'm putting into baking the model. I've been wanting to at least release the model currently, but there's no point when it's doodoo asscheeks for zero-shot AND still a good majority of the voices it's training on; the validation output is still penis, and I'm very, very sure whatever validation output that does sound great was secretly trained against previously, as the dataset sets aside 5% of a speaker aside for validation (which depends on shuffling the list with a 0-seed for each voice, so it could very well change every time I'm recreating datasets).

I lied, I added more data, namely the CoD lines and the English lines from those BNHA/MHOJ2 YouTube voice clip compilations linked earlier, and a few other personal add-ins from YouTube voice line compilations to further put my trust in how it works. It seems decent, so I suppose if you can't be assed to source the raw audio files, but it exists as one conglomerate, feel free to share that.

I'm glad those worked. There are a bunch of similar games that would have really good sources as well...

Fighter Z

dragon ball tenkaichi

Attack on Titan (1 and 2)

Naruto

DB kakarot

Scarlet Nexus

One piece

Demon Slayer

Just to name a few. (I picked these, because they have a japanese component, for if and when you start adding those.)

? Are you doing a reset?

How big is the model?

Also, I noticed that GIT was down for a moment this morning. Made me realize, there is no apparent way to contact you if shit went south? Do you happen to have some type of link, fan email, or alternative in case of?

@mrq

Most importantly, where are you at with data? I realized that of the links I provided you, it only in total amounted to about 5 hrs, which, if we need massive amounts, is pratically nothing. Do you have any goals for how much data you want ie a tracker of sorts? Maybe make an issue?

Also, in relation to this problem, have you heard of Spleeter, or relevant software? Basically, it seperates vocals from an audio track. Based on Hgt1778's suggestion, I was wondering if we could take anime, and run it through something like Spleeter, to then have clean audio. I figure that this might help fill the data void? I am playing around with software at the moment, and will let you know how well it works. The only flaws I see are that the voices for a particular anime would have to be "sorted" out, but I believe whisper can do?

Anyways, good shit. I've got more voicelines on the way.

https://vocaroo.com/19wD5o3Lvsz4 - Original

https://vocaroo.com/14xGpSo2ivbr - Vocals separated

For very little finetuning, it is actually VERY impressive. Do you think this would work? (It would allow you to utilize not only any anime, but even beyond that, any show...

This was run using Spleeter

An LR/optimizer reset just discards the metadata used for training while retaining the actual model. This way, the iteration count is reset to zero, and the LR schedule restarts from the beginning, hence the LR restart.

LR restarts help jostle any stuck weights that may not get resolved from just bruteforce training with really low LR rates, especially when adding in more data to a model that cannot yet generalize.

Can't really check, since the model + optimizer states from DeepSpeed are bfloat16 as well, and not the default fp32 weights typical with a torch model. They're 2.2GiB each for the NAR and the AR, but I think exporting them will get it down to 500MiB each? I can't remember.

There's a weird issue that seems to come here and there where either Gitea itself, or the VM that Gitea is running in, will shit the bed. It's such a niche thing that I can't really diagnose, but restarting the VM fixes it.

64138 samples, 250 speakers, 139865 seconds of audio.

I think in a very, very, very narrow pinch, it's fine, but training is very sensitive to any and all audio quirks in a speaker's voice. I imagine if the quirks itself are isolated to a specific voice it wouldn't be too big of a deal, but if those quirks are present in a significant portion of the dataset, then it more than likely will taint the model in some shape or form.

Maybe when the dataset itself is larger I can consider it, as there'd be less fear of it muddying things up, but it should be used sparingly for now.

Right now though, I'm just letting the model bake again with the new data, and seeing if the older portions of the dataset catches up finally.

I'm biting the bullet and dumping in LibriTTS

clean-100(247 speakers, 30k unsegmented lines, don't have an idea about duration yet or final line count).I'm getting really worried that I'm going to have to dump the weights and start from scratch due to it overtraining solely from the text phonemes itself. From evaluation output, I had something sourced from SA2 Knuckles outputted with SA2 Rouge's voice, and the only explanation I have for it is that it's overtraining on the text phonemes itself.

iunno, I'm probably just overreacting over a flaw, but I either need to take the loss and dump three weeks of training or risk it getting worse and having to dump it later in the line. I honestly don't remember how long it did take for it to even get to something with a semblance of speech with a tiny dataset, so that's probably the only reason I'm against dumping it, since even bad weights are better than no weights.

@mrq

Is the size the general concern atm?

Would you rather have more clean game data?

If so, try https://www.sounds-resource.com/ . It has extracted audio assets from proabably 70% of games you could think of,which means all clean. And it is sorted by language, character. If it meets your standards, it probably has more than you could use.

For a more specific example, look at one like https://www.sounds-resource.com/xbox/cars/

Check out lightning mcqueens voice pack. Most of the packs will be organized as such (and as you can see, each game should have a decent abundance.)

If you are going to use LibriTTS then you should also check out HiFi-TTS which is a smaller but higher quality (sampled at 44.1 kHz) dataset as that's what tortoise also uses in addition to LibriTTS since that might be better for higher quality output.

also if you were going to train a multi-lingural model like Valle-X than this has a lot of dataset for various different languages.

Twice I had what I was going to say eaten away. I already spent maybe 45 minutes to an hour, so I'm keeping it as brief as I can. Apologies if it comes off rather curt.

Yes, in an odd way.

My concern for the "old" data not being up to par with the new one seems to be moreso from those speakers' line counts being much bigger than the line counts the "new" data. I'm not sure where the fix for it lies, so I'm just going to ignore it for now.

Already have.

Yeesh.

I'll keep it in mind. At that point though, I might as well dump in more LibreTTS.

Training with the

clean-100portion seems to be doing fine; it didn't outright decimate my loss/accuracy metrics, so I guess the model itself is in good standings with not overfitting. The evaluation output even at an epoch doesn't seem completely terrible; definite room for improvement, but it at least is trying.Sort of off-topic but Microsoft just published Natural Speech 2 which seems to be a significant improvement over VALLE architecture. A short skim through of the paper it seems to be a latent diffusion model which might make it slower than VALLE(?). It also seems that zero-shot prompting would be much easier and better since it only require audio and tortoise like 11Labs.

The biggest innovation in this paper is that they use a continuous vector audio codec compared to discrete tokens.

It seems to be simpler since the diffusion model replaces the two stage approach of VALLE. It also can do singing (though not as natural as regular speech) which is pretty neat (though it needs to be trained with singing in its dataset obviously).

It probably be awhile before any good open-source reproduction will come out like VALLE is right now but it seems useful to an eye on it for now :)

https://speechresearch.github.io/naturalspeech2/

though lucidrain already started with his pytorch implementation because he's insane lol

mmm, yeah I definitely won't try and tackle that. I'll let the real experts deal with maturing it, and hopefully someone with the actual compute to play around with it and homebrewing a model.

From a cursory glance at the paper, it does seem to address my "concerns" I had with VALL-E with it's "haha lets throw some quantized waveforms at it and see how it learns from it" approach that makes VALL-E, in a hyper-reductionist manner, a sophisticated MIDI synthesizer.

However, the more I look at the paper, the more turned off I feel about it.

It's reintroducing the problems I did have with TorToiSe with more moving parts, still relying on conditioning latents (or at least, an analog to it). Now there has to be a model for encoding phonemes AND the pitch/duration predictor AND the speech prompt encoder. Yeesh. Not to mention the paper says the sourced audio is sampled at 16KHz. I understand the intent, as it effectively serves as an inherent way to squash out any unwanted sound from the waveform by narrowing the bandwidth, but it's still a quality drop somewhere, which I feel is a bit of what TorToiSe suffers from too. Relying on latent vectors instead of the input waveform also pretty much erases any hope for voices with intentional quirks like SHODAN or GLaDOS from being reproduced with it. VALL-E at least has that saving grace from working on the actual waveform itself, and can reproduce all acoustic conditions.

The training dataset seems to leave a lot to be desired too. The paper mentions the dataset is 44K hours, which at first seemed like it just means the new method is just that more efficient, but later the paper mentions

"our model is still underfitting and longer training will result in better performance". Like, they mention that a large, large dataset is practically necessary for good TTS, but they just don't quite do that.The demo also leaves a lot to be desired. At first, it sounds better than VALL-E, as VALL-E has that slight quantize crust that I'm all too familiar with. But, I checked back with the original demo page, and that crust is missing. It's funny, since the paper mentions they

"directly collect some audio samples from its demo page for comparison". Ignoring that, the speech seems rather stilted for it being "natural".I'll give it to the singing, though. While I'm sure VALL-E could reproduce singing of some kind (with what I imagine is better annotating the input text), but currently it doesn't, and for all I know it might very well not be able to. But, I think if anyone wants something that sings, they'd just use a VITS solution, at least from all the prattling I've heard about it in passing.

iunno, I'm trying not to be a hater, and it definitely is neat seeing that in the span of what, a few months from VALL-E, and much shorter from VALL-E X, there's already a contender to replace it. I'm sure the lucidrains implementation will accomplish something, especially seeing it's sponsored, and I'll definitely play around with it if something realizes from it.

But, my impressions of it so far are just... flaccid, and at that point I'd just use TorToiSe over it.

In other news, I don't have much of a progress update. Training seems to need at least another week at this rate. It's dawning more and more on me that it might take a really long time to train the model until it gets something adequate, and the temptation to just rent something like an 8x4090 machine is creeping up on me, I think for like $6/hr. I think my only setback (besides the obvious inevitable money pit) is that I already kind of forgot the exact procedure to get training under a docker container working, and I can't be assed to play around with docker files first.

Since I don't really got anywhere else to mention it, I think I squashed the error 500 bugs. I'm not sure why it happened recently, but fuck SystemD. I had to use

coreadmin my global zone to disable core dumping, which coredumps never ever matter for me anyways.In quasi-related news, I'm leveraging LibriTTS's

test-cleandataset to serve as an actual validation dataset, to gauge how well the model is at generalizing speech (the crux of zero-shot). I should have done it much, much earlier, to better gauge how things are going over time, but oh well. Training this monster of a batch is currently at iteration 19915, epoch 53ish, so I got probably a half-week left before deciding when to add more data in. I might just cave and dump theclean-360dataset into it then, iunno.Just moreso wanted to mention the error 500 issue being resolved, hopefully.

I do have this, I suppose as a very rough zero-shot test: output / reference

It's kind of cute in a weird way seeing it try and speak. It's definitely getting there, but a lot of the other validation output leaves a lot to be desired.

Bit the bullet yesterday; transcribed the

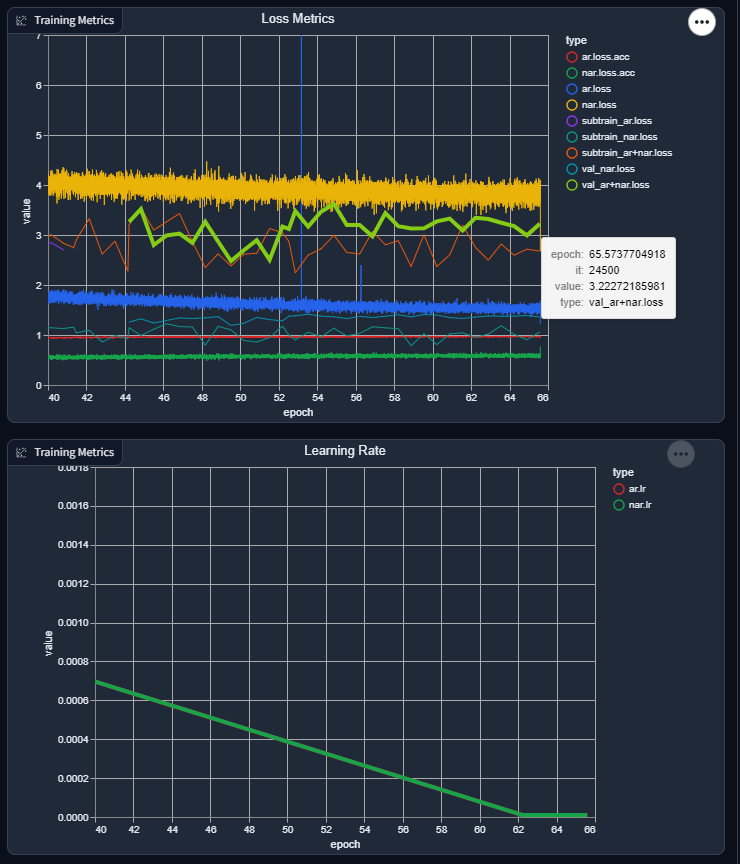

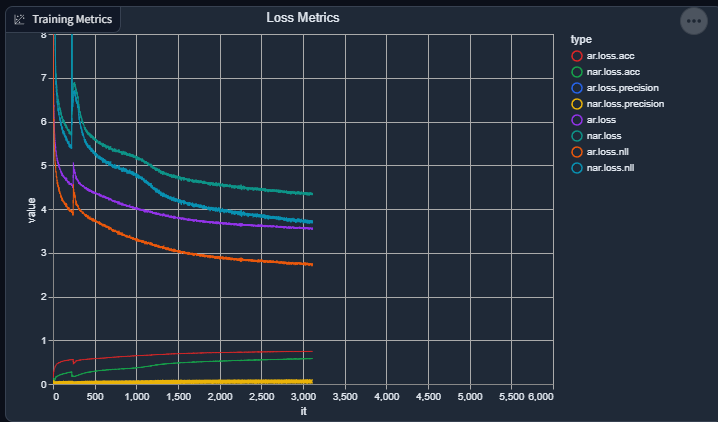

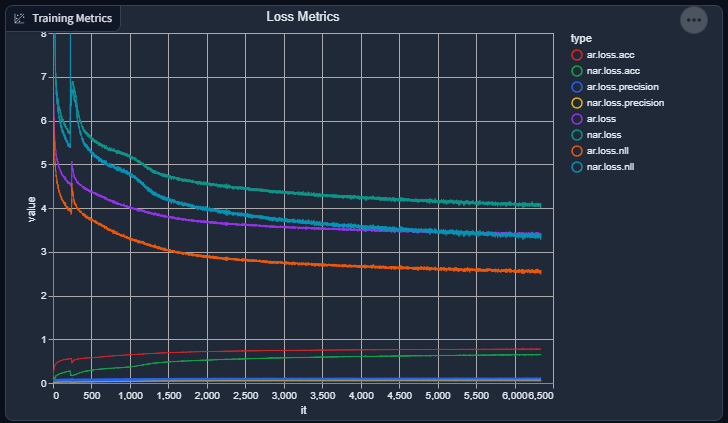

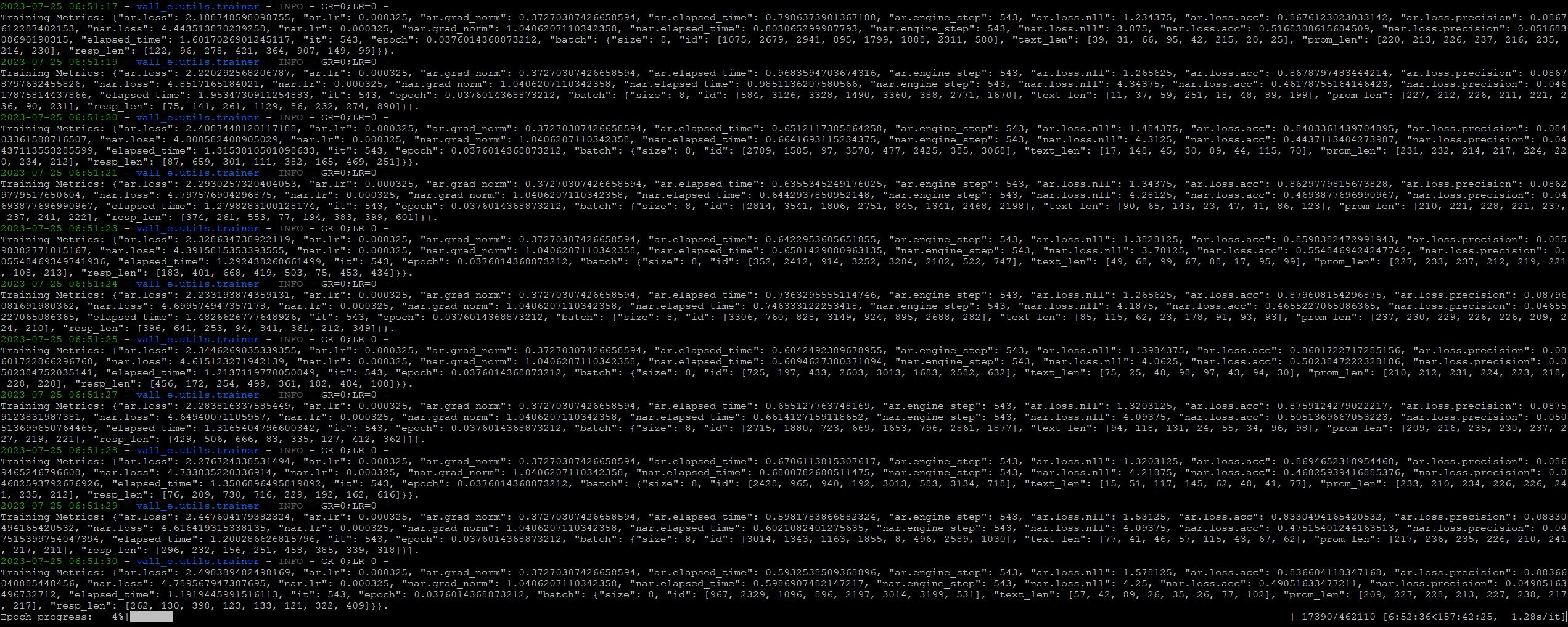

train-360LibriTTS (sub)dataset, putting me at a total of 116 hours, 167520 lines (total, actual dataset could be more, but I dropped Westwood's Blade Runner and FFXII lines since I felt they weren't really worth training against for the quality they were at).I'm starting to be at wit's end, though. The metrics towards the end of the last batch stagnated, and the current batch seems pretty stagnated too, even with a new LR restart, so I don't know. I'll have to keep an eye on it for a few days, but I'm worried that no amount of more training and data will help.

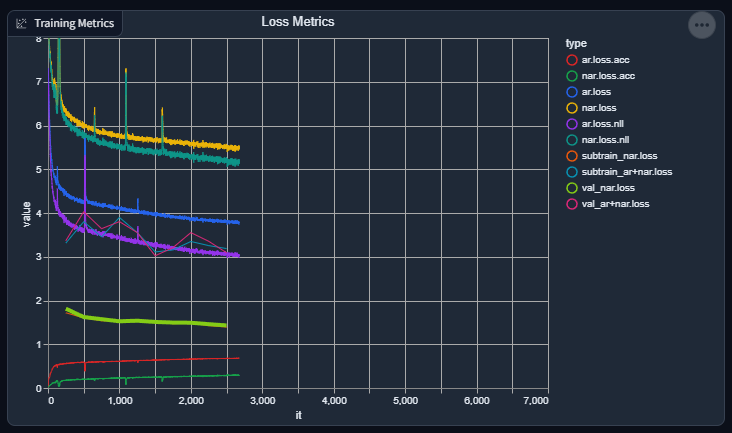

End of the last batch:

Current progress with the new batch:

Have you seen https://github.com/Fictiverse/bark? The singing is pretty neat, and the inference time is quite fast.

Seen it. I mentioned some thoughts here, but I'll mention my current thoughts:

The last one is my biggest problem. desu I shouldn't really bother with it right now if it can't do unrestricted voice cloning (or at least, bothering trying to cobble together a way to provide your own voice

.npzs)As a slight progress update, I might have fucked up by setting my de-facto batch size (bs=16, ga=16). I have a hunch that I started getting worse results from training after I optimized the VRAM usage and increasing my settings from bs=4, ga=4. Can't really make much conclusions right now, as I just need to wait and see before making more judgments on if it works or not.

Although, I'm tempted to try the quarter sized models again. Technically I think they can be fine, since I think I fixed it outputting the combined AR+NAR audio after I gave up on it, and it'd be much, much faster to train.

In case I keel over and die or go AWOL, I'm putting my current progress and dataset onto a HuggingFace dataset repo. It'll also help me whenever I finally cave and rent out an actual machine to train this bitch on.

Also, the site should be actually fixed now. I migrated the gitea setup from an LX-brand Ubuntu zone into a normal SmartOS VM (because SystemD fucking sucks and won't tell me what's wrong), and I was able to narrow down it was

1040: Too Many Connectionsissue from using a neglected SQL VM.Apologies for it being down however many times, I guess the increase in traffic caused issues. I'm not sure why though, as I have a MediaWiki on the same machine but in a different VM that gets 5x the traffic here and it hasn't given me these issues.

Oh, actually, there is a repo for it: https://github.com/serp-ai/bark-with-voice-clone

I'll play around with it. If it proves favorable then I guess I won't need VALL-E.

I tried that fork and I the voice replication is comparable to using the non-finetuned custom voice of Tortoise in that it kind of replicate the voice of characters but it doesn't do well with anything outside of audiobook type voices... still pretty neat at least.

I whipped up a small script to play around with it and, while I had zero hitches actually getting it to run (which in post I guess I got lucky, apparently people have had it not work given the issues).

Terrible results. I tried a segment of SA2 Knuckles I already cracked out and the result is unusable. I also used a default provided speaker voice and it's mostly unusable. I'm not sure if it's related to using the small models (as this was running on my 2060, the 4070TI is still training) or not, but I might take a crack at it later with the non-small model.

If it's something inherently wrong with the repo, then at least I got an idea on generating the .npz speaker files, and the code for that can live in this repo.

I suppose I'll still add it in. I have an idea on how I would extend backbends, so if anything it'll be for that.

mrq it's really sad that you are the entire hope for the open source TTS community right now and you are using a 4070. If you open a patreon, I'll donate $50 towards your compute costs and I think some others would too.

AIVC has Bark integration. I don't really need to use any of the forks, as:

Relying on the main repo just seems better, as I don't have to wait for a fork maintainer to merge upstream commits.

It's extremely kludgy, as it requires your voices to already be transcribed with Whisper in order to use them (because generating speaker files requires a text transcription anyways). Output sounds puke at best and dogshit at worse, so I don't actually think it should be used.

But if you do want to toy with it:

git clone https://github.com/suno-ai/bark ./modules/barkpip3 install -e ./modules/barkstart.sh --tts-backend='bark'This way is required because I don't have a way to inject speaker prompt paths anywhere outside of the default one, and this way will keep some uniformity between OS's (implying that I have tested this on Windows, much less, expect it to work under Windows, much less, care if it does at the moment). This also implies DirectML won't get any love, it seems bark loves to use flags like

use_gpu=Truerather thandevice='cuda'.A ton of settings aren't use, the temperature slider works for both

text_tempandwaveform_temp, because I can't be assed to modify the existinggeneration_proxyfunction on the web UI side. You are required to have already transcribed your target voice in the web UI. When generating, it'll pick a random transcription to use as the source. I do not have a convenient way to "select" a prompt.'I figured I might as well get it added in and wait for things to mature. This will not replace TorToiSe, like, at all.

Nah, I'm just being both stingy and stubborn. Money isn't an issue, as it hasn't been for several of my endeavors. I also refuse to spend any more money on Ngreedia cards, much less, another GPU (I'm still stuck with a 2060, two 6800XTs, and now this 4070Ti I'm feeling some remorse for).

I'll be fine.

I had a pretty lengthy progress report (despite framing it as brief), but I felt it was much ado about nothing, and might have painted too high of an expectation that I keep forgetting that I make and forget that I break. Anyways:

The above changes has me not stressing so much about training now. I just need to remember to stop making the same mistakes again and again.

And when this run is over (I am not making any promises):

iunno, Bark sounding so terrible seems to put more pressure on getting this model trained. I don't know how it can sound so bad. Even the output for the past month of training at least maintained some semblance of the source. But Bark didn't sound anything like the demos.

Again, I'd like to consider contributing some money towards the cloud compute costs if possible. Opening a patreon would be good.

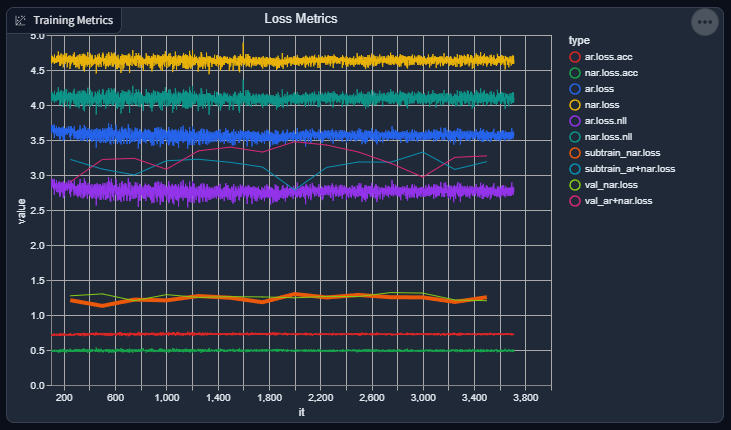

Slight progress report again: things are going swimmingly again. Losses are going down (not as fast as apparent as I wish, but still going down), and accuracies are going up (again, not as fast as I wish).

I suppose, given how the evaluation / validation output consistently sounds, it's doing a great job at replicating the acoustic "environment" of a speaker (good), but still has a lot more to go in order to consistently synthesize speech.