Stable Diffusion web UI (with a DirectML patch)

| .github/ISSUE_TEMPLATE | ||

| ESRGAN | ||

| javascript | ||

| models | ||

| modules | ||

| scripts | ||

| .gitignore | ||

| artists.csv | ||

| environment-wsl2.yaml | ||

| launch.py | ||

| README.md | ||

| requirements_versions.txt | ||

| requirements.txt | ||

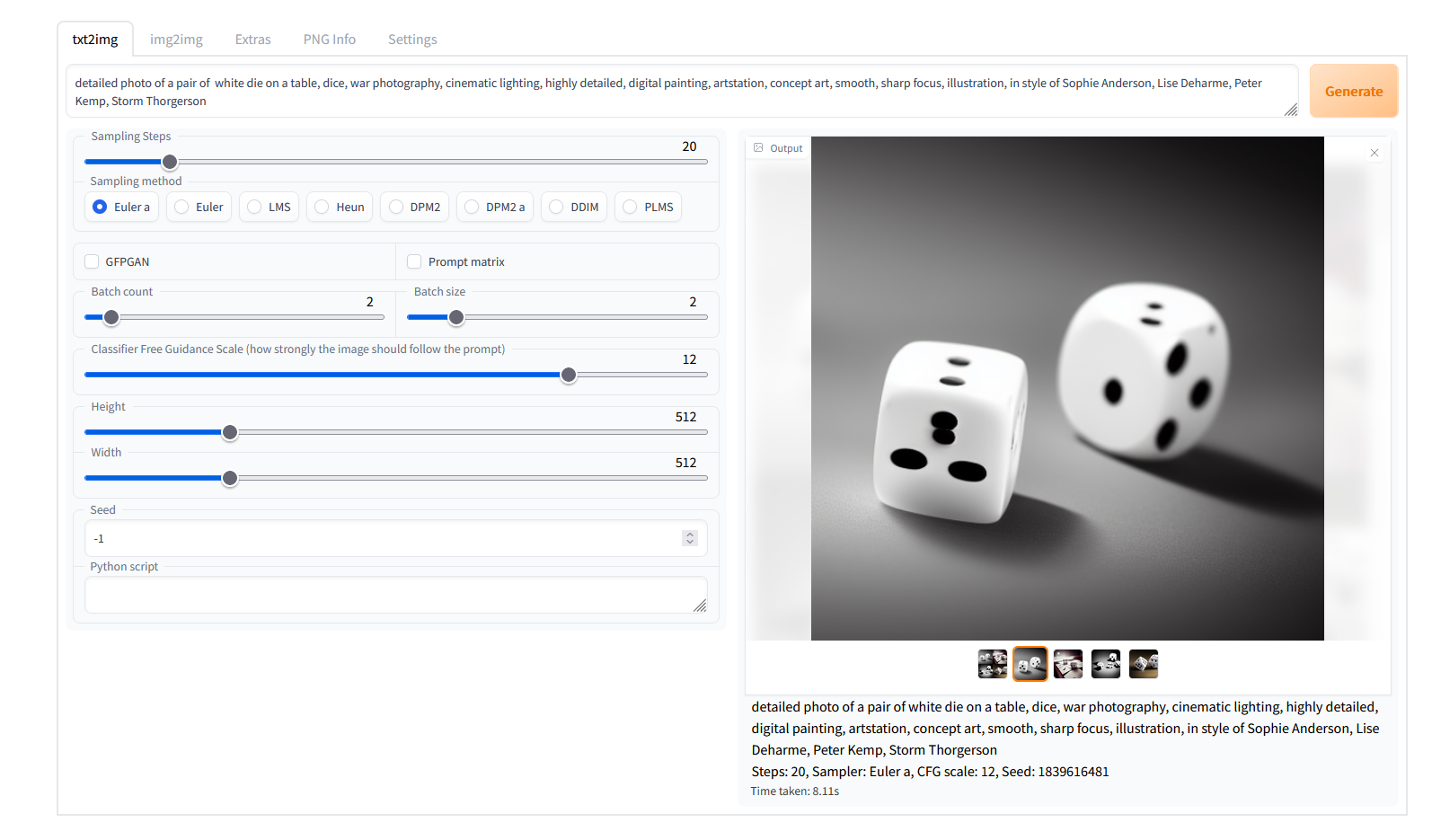

| screenshot.png | ||

| script.js | ||

| style.css | ||

| webui-user.bat | ||

| webui-user.sh | ||

| webui.bat | ||

| webui.py | ||

| webui.sh | ||

Stable Diffusion web UI

A browser interface for Stable Diffusion, based on the Gradio library.

Features

Detailed feature showcase with images:

- Original txt2img and img2img modes

- One click install and run script (but you still must install python and git)

- Outpainting

- Inpainting

- Prompt matrix

- Stable Diffusion upscale

- Attention

- Loopback

- X/Y plot

- Textual Inversion

- Extras tab with:

    - GFPGAN, neural network that fixes faces

    - CodeFormer, face restoration tool as an alternative to GFPGAN

    - RealESRGAN, neural network upscaler

    - ESRGAN, neural network with a lot of third party models

- Resizing aspect ratio options

- Sampling method selection

- Interrupt processing at any time

- 4GB video card support

- Correct seeds for batches

- Prompt length validation

- Generation parameters added as text to PNG

- Tab to view an existing picture's generation parameters

- Settings page

- Running custom code from UI

- Mouseover hints for most UI elements

- Possible to change default/min/max/step values for UI elements via text config

- Random artist button

- Tiling support: UI checkbox to create images that can be tiled like textures

- Progress bar and live image generation preview

- Negative prompt

- Styles

- Variations

- Seed resizing

- CLIP interrogator

- Prompt Editing
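To illustrate the "Generation parameters added as text to PNG" item above, here is a minimal sketch of reading text metadata back out of a PNG using only the Python standard library. It assumes the parameters are stored in an uncompressed `tEXt` chunk; the function name `read_png_text_chunks` is hypothetical and is not part of this project, and `iTXt`/`zTXt` chunks are deliberately not handled.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict:
    """Return {keyword: text} for every tEXt chunk found in PNG bytes.

    Hypothetical helper for illustration; CRCs are skipped, not verified.
    """
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")

    texts = {}
    pos = len(PNG_SIGNATURE)
    # Each chunk is: 4-byte big-endian length, 4-byte type, body, 4-byte CRC.
    while pos + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            # tEXt body is "keyword\0text", both Latin-1 encoded.
            keyword, _, text = body.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        elif chunk_type == b"IEND":
            break
        pos += 8 + length + 4
    return texts
```

A possible use is `read_png_text_chunks(open("image.png", "rb").read())`, then looking up the keyword the UI writes. The keyword is not stated in this README, so inspect the returned dictionary rather than relying on a particular name.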

Installation and Running

Make sure the required dependencies are met, then follow the instructions available for both NVIDIA (recommended) and AMD GPUs.

Alternatively, use Google Colab.

Automatic Installation on Windows

- Install Python 3.10.6, checking "Add Python to PATH".

- Install git.

- Download the stable-diffusion-webui repository, for example by running `git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git`.

- Place `model.ckpt` in the `models` directory (see dependencies for where to get it).

- (Optional) Place `GFPGANv1.4.pth` in the base directory, alongside `webui.py` (see dependencies for where to get it).

- Run `webui-user.bat` from Windows Explorer as a normal, non-administrator user.
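Before launching, it can help to confirm that the files from the steps above are where the launcher expects them. The following is a minimal sketch, not part of the repository: the helper name `missing_files` is hypothetical, and the paths simply mirror the locations named in the list (`models/model.ckpt`, and `GFPGANv1.4.pth` in the base directory).

```python
from pathlib import Path


def missing_files(repo_dir):
    """Return labels of expected files that are absent from repo_dir.

    Hypothetical helper; paths follow the installation steps in this README.
    """
    repo = Path(repo_dir)
    expected = {
        "model.ckpt (required)": repo / "models" / "model.ckpt",
        "GFPGANv1.4.pth (optional)": repo / "GFPGANv1.4.pth",
    }
    return [label for label, path in expected.items() if not path.is_file()]


if __name__ == "__main__":
    # Assumes the script is run from the repository's base directory.
    for label in missing_files("."):
        print("Not found:", label)
```

An empty result means both files were found; a missing optional file only affects face restoration with GFPGAN.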

Automatic Installation on Linux

- Install the dependencies:

      # Debian-based:
      sudo apt install wget git python3 python3-venv

      # Red Hat-based:
      sudo dnf install wget git python3

      # Arch-based:
      sudo pacman -S wget git python3

- To install in `/home/$(whoami)/stable-diffusion-webui/`, run:

      bash <(wget -qO- https://raw.githubusercontent.com/AUTOMATIC1111/stable-diffusion-webui/master/webui.sh)
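If the install command fails early, a quick check that the tools installed in the first step are on `PATH` can narrow things down. This is a minimal sketch under that assumption; `missing_tools` is a hypothetical helper, and it only checks that the executables can be found, not their versions or whether the `venv` module works.

```python
import shutil

# Tool names taken from the package lists above.
REQUIRED_TOOLS = ("wget", "git", "python3")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the subset of tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing from PATH:", ", ".join(missing))
    else:
        print("All required tools found on PATH.")
```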

Documentation

The documentation was moved from this README over to the project's wiki.

Credits

- Stable Diffusion - https://github.com/CompVis/stable-diffusion, https://github.com/CompVis/taming-transformers

- k-diffusion - https://github.com/crowsonkb/k-diffusion.git

- GFPGAN - https://github.com/TencentARC/GFPGAN.git

- ESRGAN - https://github.com/xinntao/ESRGAN

- Ideas for optimizations - https://github.com/basujindal/stable-diffusion

- Doggettx - Cross Attention layer optimization - https://github.com/Doggettx/stable-diffusion, original idea for prompt editing.

- Idea for SD upscale - https://github.com/jquesnelle/txt2imghd

- Noise generation for outpainting mk2 - https://github.com/parlance-zz/g-diffuser-bot

- CLIP interrogator idea and borrowing some code - https://github.com/pharmapsychotic/clip-interrogator

- Initial Gradio script - posted on 4chan by an Anonymous user. Thank you Anonymous user.

- (You)