An unofficial PyTorch implementation of VALL-E

| data | ||

| docs | ||

| scripts | ||

| vall_e | ||

| .gitignore | ||

| LICENSE | ||

| README.md | ||

| setup.py | ||

| vall-e.png | ||

VALL'E

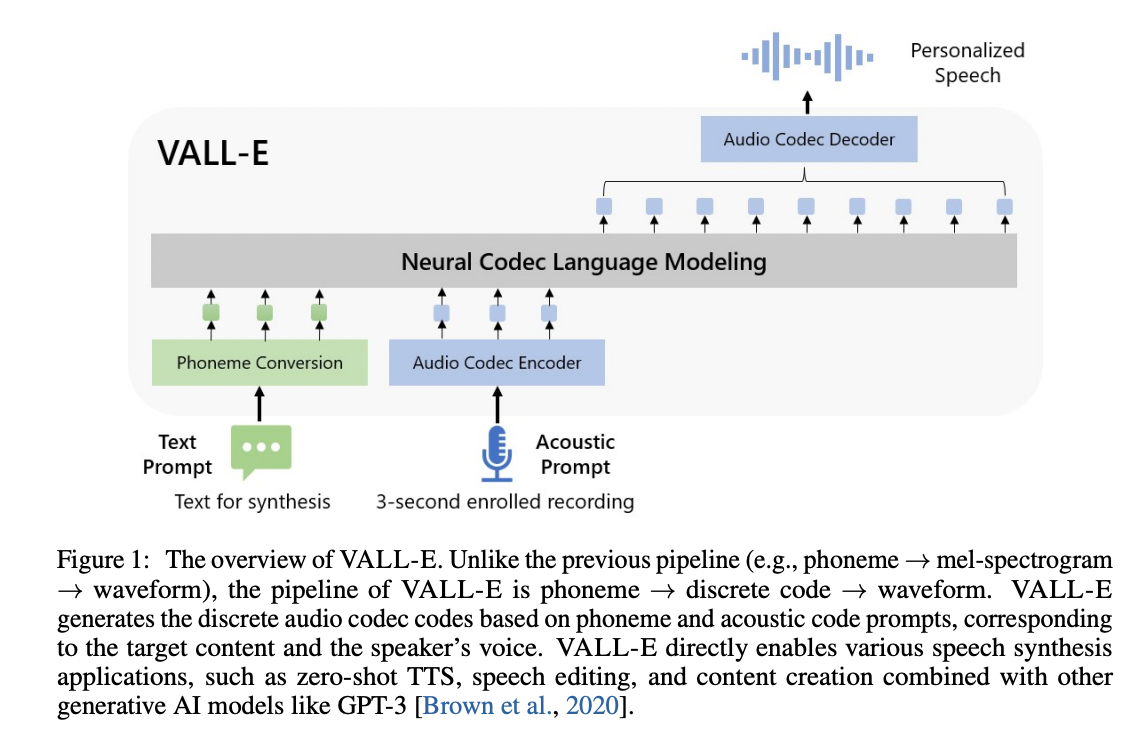

An unofficial PyTorch implementation of VALL-E, utilizing the EnCodec encoder/decoder.

A demo is available on HuggingFace here.

Requirements

Besides a working PyTorch environment, the only hard requirement is espeak-ng for phonemizing text:

- Linux users can consult their package managers on installing

espeak/espeak-ng. - Windows users are required to install

espeak-ng.- additionally, you may be required to set the

PHONEMIZER_ESPEAK_LIBRARYenvironment variable to specify the path tolibespeak-ng.dll.

- additionally, you may be required to set the

- In the future, an internal homebrew to replace this would be fantastic.

Install

Simply run pip install git+https://git.ecker.tech/mrq/vall-e or pip install git+https://github.com/e-c-k-e-r/vall-e.

This repo is tested under Python versions 3.10.9, 3.11.3, and 3.12.3.

Pre-Trained Model

Pre-trained weights can be acquired from

- here or automatically when either inferencing or running the web UI.

./scripts/setup.sh, a script to setup a proper environment and download the weights. This will also automatically create avenv.- when inferencing, either through the web UI or CLI, if no model is passed, the default model will download automatically instead, and should automatically update.

Documentation

The provided documentation under ./docs/ should provide thorough coverage over most, if not all, of this project.

Markdown files should correspond directly to their respective file or folder under ./vall_e/.